import xarray as xr

import s3fs

import cartopy.crs as ccrs

from matplotlib import pyplot as plt

import earthaccess

from earthaccess import Auth, DataCollections, DataGranules, Store

%matplotlib inline

From the PO.DAAC Cookbook. To access the GitHub version of the notebook, follow this link.

Note: This notebook uses Version C (2.0) of SWOT data, which was the version available when this notebook was developed. The most recent data are now available as Version D for SWOT collections. Version C measurements run until May 3, 2025, and Version D measurements start on May 5, 2025.

Access SWOT L2 Oceanography Data in AWS Cloud

Summary

This notebook demonstrates direct access to PO.DAAC archived products in the Earthdata Cloud in AWS Simple Storage Service (S3). In this demo, we showcase the usage of SWOT Level 2 Low Rate products from Version C of the data, also known as 2.0:

- SWOT Level 2 KaRIn Low Rate Sea Surface Height Data Product - shortname SWOT_L2_LR_SSH_2.0

- SWOT Level 2 Nadir Altimeter Interim Geophysical Data Record with Waveforms - SSHA Version C - shortname SWOT_L2_NALT_IGDR_SSHA_2.0

  - This is a subcollection of the parent collection: SWOT_L2_NALT_IGDR_2.0

- SWOT Level 2 Radiometer Data Products - overview of all

We will access the data from inside the AWS cloud (us-west-2 region, specifically) and load a time series made of multiple netCDF files into a single xarray dataset.
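Loading several granules as one dataset amounts to stitching them end to end along the along-track dimension `num_lines`, while the cross-track `num_pixels` stays fixed; in the output below, ten chunks of 9866 lines add up to `num_lines: 98660`. The sketch shows that mechanism on small synthetic datasets (made-up values, not SWOT data); `make_fake_granule` is a hypothetical helper. On real files, `xr.open_mfdataset(..., combine="nested", concat_dim="num_lines")` performs the same concatenation lazily.

```python
import numpy as np
import xarray as xr


def make_fake_granule(n_lines: int, n_pixels: int = 69, seed: int = 0) -> xr.Dataset:
    """Build a tiny stand-in for one granule (synthetic values, not real SWOT data)."""
    rng = np.random.default_rng(seed)
    return xr.Dataset(
        {
            "ssha_karin": (("num_lines", "num_pixels"), rng.normal(size=(n_lines, n_pixels))),
            # Offset each fake granule's times so the concatenation order is visible.
            "time": (("num_lines",), np.arange(n_lines, dtype="float64") + 1000.0 * seed),
        }
    )


# Three synthetic "granules" of 5 along-track lines each.
granules = [make_fake_granule(5, seed=i) for i in range(3)]

# Stitch them end to end along num_lines, as open_mfdataset does with
# combine="nested" and concat_dim="num_lines".
combined = xr.combine_nested(granules, concat_dim="num_lines")

print(combined.sizes)  # num_lines grows to 15; num_pixels stays 69
```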

Requirement:

This tutorial can only be run in an AWS cloud instance running in the us-west-2 region.

This instance will cost approximately $0.0832 per hour. The entire demo runs in considerably less than an hour.

Learning Objectives:

- authenticate with the earthaccess Python library using your NASA Earthdata Login

- access DAAC data directly from the in-region S3 bucket without moving or downloading any files to your local (cloud) workspace

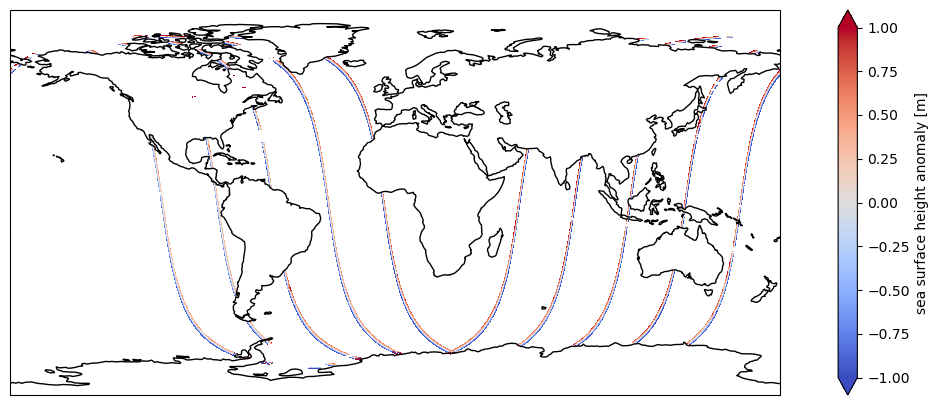

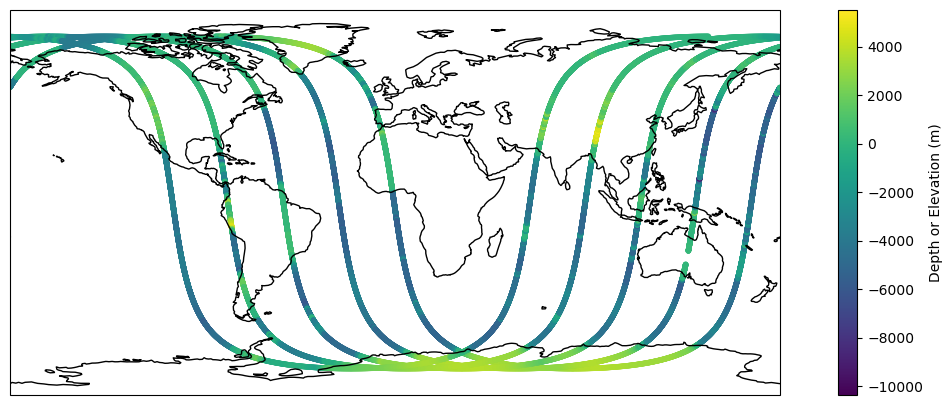

- plot the first time step in the data

Note: no files are downloaded off the cloud; instead, we work with the data directly in the AWS cloud.

Libraries Needed:

Earthdata Login

An Earthdata Login account is required to access data, as well as to discover restricted data, from the NASA Earthdata system. Please visit https://urs.earthdata.nasa.gov to register and manage your Earthdata Login account. The account is free to create and only takes a moment to set up. We use earthaccess to authenticate your login credentials below.

1. SWOT Level 2 KaRIn Low Rate Sea Surface Height Data Product

Outlined below is a map of the different KaRIn data products we host at PO.DAAC and their subcollections, and why you may choose one over the other. For more information, see the SWOT Data User Handbook.

Once you’ve picked the dataset you want to look at, you can enter its shortname or subcollection below in the search query.

Access Files without any Downloads to your running instance

Here, we use the earthaccess Python library to search for the data and then load it directly into xarray without downloading any files. This dataset is currently restricted to a select few users and can only be accessed using the version of earthaccess reinstalled above. If zero granules are returned, make sure the correct version (0.5.4) is installed.
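A minimal sketch of the login and search step, assuming earthaccess is installed and Earthdata Login credentials are available in a `~/.netrc` file. The date range and the `*Expert*` granule-name pattern used to select a subcollection are illustrative assumptions, not values taken from this notebook, and `build_search_kwargs` and `search_swot` are hypothetical helper names. The earthaccess import is deferred into the function so the helpers can be defined without network access.

```python
from typing import Optional


def build_search_kwargs(short_name: str, start: str, end: str,
                        granule_pattern: Optional[str] = None,
                        count: int = 10) -> dict:
    """Assemble keyword arguments for earthaccess.search_data (hypothetical helper)."""
    kwargs = {"short_name": short_name, "temporal": (start, end), "count": count}
    if granule_pattern is not None:
        # Wildcard match on the granule name, e.g. to pick one subcollection.
        kwargs["granule_name"] = granule_pattern
    return kwargs


def search_swot(short_name: str = "SWOT_L2_LR_SSH_2.0",
                start: str = "2024-01-25", end: str = "2024-01-26",  # illustrative dates
                granule_pattern: Optional[str] = "*Expert*"):
    """Log in to Earthdata and search CMR; needs credentials and network access."""
    import earthaccess  # deferred so this module loads without earthaccess or a network

    earthaccess.login(strategy="netrc")
    results = earthaccess.search_data(
        **build_search_kwargs(short_name, start, end, granule_pattern)
    )
    print(f"Found {len(results)} granules")
    return results
```

If `search_swot()` reports zero granules, the version check described above is the first thing to rule out.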

Open with xarray

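earthaccess reports an approximate total of 0.32 GB for the 10 granules we open here (roughly 30 MB each). Once decoded into floating-point arrays, the combined dataset is larger in memory (xarray reports about 4 GB), but it is backed by dask, so data values are only read when a computation needs them.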
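A sketch of the open step, assuming `granules` holds the results of `earthaccess.search_data` and that the code runs in us-west-2. `earthaccess.open` returns file-like objects for the S3 objects, and `xr.open_mfdataset` concatenates them along `num_lines`. The `engine="h5netcdf"` and `decode_times=False` arguments are assumptions: h5netcdf is an xarray backend that can read file-like objects, and `time` appears as plain float64 seconds in the output below. The small `nbytes` helper reproduces the sizes in that output: one 2-D float64 variable holds 98660 × 69 × 8 = 54,460,320 bytes (about 54 MB, or 51.94 MiB), and each 9866-line chunk holds a tenth of that.

```python
def open_swot_granules(granules):
    """Open granules lazily from S3 and concatenate them along the along-track axis."""
    import earthaccess  # deferred: requires credentials and in-region access
    import xarray as xr

    files = earthaccess.open(granules)
    return xr.open_mfdataset(
        files,
        engine="h5netcdf",      # assumed backend for file-like objects
        combine="nested",
        concat_dim="num_lines",
        decode_times=False,     # keep time as float seconds, as in the output below
    )


def nbytes(shape, itemsize):
    """In-memory size of an uncompressed array with the given shape and item size."""
    total = itemsize
    for n in shape:
        total *= n
    return total


full = nbytes((98660, 69), 8)   # one 2-D float64 variable, all 10 granules
chunk = nbytes((9866, 69), 8)   # the same variable for one granule (one dask chunk)
print(full, round(full / 2**20, 2))    # 54460320 51.94
print(chunk, round(chunk / 2**20, 2))  # 5446032 5.19
```

The float32 variables in the listing (for example `sig0_karin`) come out at half these sizes.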

Opening 10 granules, approx size: 0.32 GB

using endpoint: https://archive.swot.podaac.earthdata.nasa.gov/s3credentials

<xarray.Dataset> Size: 4GB

Dimensions: (num_lines: 98660, num_pixels: 69, num_sides: 2)

Coordinates:

latitude (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

longitude (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

latitude_nadir (num_lines) float64 789kB dask.array<chunksize=(9866,), meta=np.ndarray>

longitude_nadir (num_lines) float64 789kB dask.array<chunksize=(9866,), meta=np.ndarray>

Dimensions without coordinates: num_lines, num_pixels, num_sides

Data variables: (12/98)

time (num_lines) float64 789kB dask.array<chunksize=(9866,), meta=np.ndarray>

time_tai (num_lines) float64 789kB dask.array<chunksize=(9866,), meta=np.ndarray>

ssh_karin (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

ssh_karin_qual (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

ssh_karin_uncert (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

ssha_karin (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

... ...

swh_ssb_cor_source (num_lines, num_pixels) float32 27MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

swh_ssb_cor_source_2 (num_lines, num_pixels) float32 27MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

wind_speed_ssb_cor_source (num_lines, num_pixels) float32 27MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

wind_speed_ssb_cor_source_2 (num_lines, num_pixels) float32 27MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

volumetric_correlation (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

volumetric_correlation_uncert (num_lines, num_pixels) float64 54MB dask.array<chunksize=(9866, 69), meta=np.ndarray>

Attributes: (12/62)

Conventions: CF-1.7

title: Level 2 Low Rate Sea Surfa...

institution: CNES

source: Ka-band radar interferometer

history: 2024-02-03T22:27:17Z : Cre...

platform: SWOT

... ...

ellipsoid_semi_major_axis: 6378137.0

ellipsoid_flattening: 0.0033528106647474805

good_ocean_data_percent: 76.4772191457865

ssha_variance: 0.4263933333980923

references: V1.2.1

equator_longitude: -5.36

- num_lines: 98660

- num_pixels: 69

- num_sides: 2

latitude (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- latitude (positive N, negative S)

- standard_name :

- latitude

- units :

- degrees_north

- valid_min :

- -80000000

- valid_max :

- 80000000

- comment :

- Latitude of measurement [-80,80]. Positive latitude is North latitude, negative latitude is South latitude.

longitude (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- longitude (degrees East)

- standard_name :

- longitude

- units :

- degrees_east

- valid_min :

- 0

- valid_max :

- 359999999

- comment :

- Longitude of measurement. East longitude relative to Greenwich meridian.
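Longitude here runs east from Greenwich over 0 to 360 degrees; the packed `valid_max` of 359999999 appears to correspond to 359.999999° (a 1e-6 scale factor inferred from the [-80,80] latitude comment above, not read from the file). Maps or tools that expect -180 to 180 need the values wrapped. A minimal numpy sketch, with `wrap_longitude` as a hypothetical helper name; the same expression works on an xarray DataArray such as `ds.longitude`.

```python
import numpy as np


def wrap_longitude(lon):
    """Map longitudes in [0, 360) to [-180, 180); NaN stays NaN."""
    lon = np.asarray(lon, dtype="float64")
    return (lon + 180.0) % 360.0 - 180.0


print(wrap_longitude([0.0, 90.0, 180.0, 270.0, 359.5]))
```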

latitude_nadir (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- latitude of satellite nadir point

- standard_name :

- latitude

- units :

- degrees_north

- quality_flag :

- orbit_qual

- valid_min :

- -80000000

- valid_max :

- 80000000

- comment :

- Geodetic latitude [-80,80] (degrees north of equator) of the satellite nadir point.

longitude_nadir (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- longitude of satellite nadir point

- standard_name :

- longitude

- units :

- degrees_east

- quality_flag :

- orbit_qual

- valid_min :

- 0

- valid_max :

- 359999999

- comment :

- Longitude (degrees east of Grenwich meridian) of the satellite nadir point.

time (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- time in UTC

- standard_name :

- time

- calendar :

- gregorian

- tai_utc_difference :

- 37.0

- leap_second :

- 0000-00-00T00:00:00Z

- units :

- seconds since 2000-01-01 00:00:00.0

- comment :

- Time of measurement in seconds in the UTC time scale since 1 Jan 2000 00:00:00 UTC. [tai_utc_difference] is the difference between TAI and UTC reference time (seconds) for the first measurement of the data set. If a leap second occurs within the data set, the attribute leap_second is set to the UTC time at which the leap second occurs.
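In the output above, `time` is still float64 seconds, so converting it to calendar dates means adding those seconds to the 2000-01-01 epoch given in its `units` attribute. A numpy sketch; `seconds_since_2000_to_datetime64` is a hypothetical helper name. numpy's datetime64 does not model leap seconds, so this behaves like ordinary CF time decoding rather than a leap-second-aware conversion. Alternatively, `xr.decode_cf(ds)` asks xarray to decode the times itself.

```python
import numpy as np

# Epoch taken from the units attribute: "seconds since 2000-01-01 00:00:00.0".
EPOCH = np.datetime64("2000-01-01T00:00:00", "ns")


def seconds_since_2000_to_datetime64(seconds):
    """Convert float seconds since 2000-01-01 UTC to datetime64[ns]; NaN becomes NaT."""
    seconds = np.asarray(seconds, dtype="float64")
    nanoseconds = np.round(seconds * 1e9)
    out = np.full(seconds.shape, np.datetime64("NaT", "ns"))
    ok = np.isfinite(nanoseconds)
    out[ok] = EPOCH + nanoseconds[ok].astype("int64").astype("timedelta64[ns]")
    return out


print(seconds_since_2000_to_datetime64([0.0, 86400.0, float("nan")]))
```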

time_tai (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- time in TAI

- standard_name :

- time

- calendar :

- gregorian

- tai_utc_difference :

- 37.0

- units :

- seconds since 2000-01-01 00:00:00.0

- comment :

- Time of measurement in seconds in the TAI time scale since 1 Jan 2000 00:00:00 TAI. This time scale contains no leap seconds. The difference (in seconds) with time in UTC is given by the attribute [time:tai_utc_difference].

ssh_karin (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height

- standard_name :

- sea surface height above reference ellipsoid

- units :

- m

- quality_flag :

- ssh_karin_qual

- valid_min :

- -15000000

- valid_max :

- 150000000

- comment :

- Fully corrected sea surface height measured by KaRIn. The height is relative to the reference ellipsoid defined in the global attributes. This value is computed using radiometer measurements for wet troposphere effects on the KaRIn measurement (e.g., rad_wet_tropo_cor and sea_state_bias_cor).

ssh_karin_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for sea surface height from KaRIn

- standard_name :

- status_flag

- flag_meanings :

- suspect_large_ssh_delta suspect_large_ssh_std suspect_large_ssh_window_std suspect_beam_used suspect_less_than_nine_beams suspect_ssb_out_of_range suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_ssb_not_computable degraded_media_delays_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_ssb_missing bad_radiometer_corr_missing bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 1 2 4 8 16 64 128 256 512 1024 2048 4096 8192 32768 65536 131072 262144 524288 16777216 33554432 134217728 268435456 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 4212113375

- comment :

- Quality flag for sea surface height from KaRIn in ssh_karin variable.
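The quality flags are bit fields: each `flag_masks` entry is one bit, a stored value is the bitwise OR of every condition that applies, and 0 means no condition was raised. A sketch that decodes one value using the masks and meanings copied from `ssh_karin_qual` above, plus a strict "no flag set" mask; `decode_flags` and `good_pixels` are hypothetical helper names. Because xarray decodes the flag as float64 with NaN for fill values, the mask treats NaN as not good, and `decode_flags` expects a finite value.

```python
import numpy as np

# Copied from the flag_meanings and flag_masks attributes of ssh_karin_qual above.
FLAG_MEANINGS = (
    "suspect_large_ssh_delta suspect_large_ssh_std suspect_large_ssh_window_std "
    "suspect_beam_used suspect_less_than_nine_beams suspect_ssb_out_of_range "
    "suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control "
    "suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr "
    "degraded_ssb_not_computable degraded_media_delays_missing degraded_beam_used "
    "degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem "
    "bad_very_large_attitude bad_ssb_missing bad_radiometer_corr_missing "
    "bad_outside_of_range degraded bad_not_usable"
).split()
FLAG_MASKS = [
    1, 2, 4, 8, 16, 64, 128, 256, 512, 1024, 2048, 4096, 8192, 32768, 65536,
    131072, 262144, 524288, 16777216, 33554432, 134217728, 268435456,
    536870912, 1073741824, 2147483648,
]
assert len(FLAG_MEANINGS) == len(FLAG_MASKS)


def decode_flags(value):
    """Return the names of all bits set in one finite quality-flag value."""
    value = int(value)
    return [name for name, mask in zip(FLAG_MEANINGS, FLAG_MASKS) if value & mask]


def good_pixels(qual):
    """True where no flag bit is set; NaN (fill) counts as not good."""
    return np.asarray(qual, dtype="float64") == 0


print(decode_flags(65))  # bits 1 and 64
```

On the opened dataset, the lazy xarray equivalent of the strict filter is `ds.ssh_karin.where(ds.ssh_karin_qual == 0)`. Note that the other quality variables use different mask lists (for example, `ssha_karin_qual` adds `bad_tide_corrections_missing` at 67108864), so it is safer to read `ds[var].attrs["flag_masks"]` and `ds[var].attrs["flag_meanings"].split()` for the variable being decoded than to reuse these constants.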

ssh_karin_uncert (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height anomaly uncertainty

- units :

- m

- valid_min :

- 0

- valid_max :

- 60000

- comment :

- 1-sigma uncertainty on the sea surface height from the KaRIn measurement.

ssha_karin (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height anomaly

- units :

- m

- quality_flag :

- ssha_karin_qual

- valid_min :

- -1000000

- valid_max :

- 1000000

- comment :

- Sea surface height anomaly from the KaRIn measurement = ssh_karin - mean_sea_surface_cnescls - solid_earth_tide - ocean_tide_fes – internal_tide_hret - pole_tide - dac.
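The comment above spells out how `ssha_karin` is derived from `ssh_karin`. The same arithmetic as a function, assuming the component variables exist in the opened dataset under exactly these names (only `ssh_karin` appears in the listing shown here). Passing `ds[name]` for each argument would give a value to compare against `ssha_karin` as a quick consistency check; `ssha_from_components` is a hypothetical helper name.

```python
import numpy as np


def ssha_from_components(ssh_karin, mean_sea_surface_cnescls, solid_earth_tide,
                         ocean_tide_fes, internal_tide_hret, pole_tide, dac):
    """SSHA = ssh_karin minus the mean sea surface and each correction, per the comment."""
    return (np.asarray(ssh_karin, dtype="float64")
            - mean_sea_surface_cnescls
            - solid_earth_tide
            - ocean_tide_fes
            - internal_tide_hret
            - pole_tide
            - dac)


# Made-up values in metres, purely to exercise the arithmetic.
print(ssha_from_components(10.0, 9.0, 0.1, 0.2, 0.05, 0.01, 0.04))
```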

ssha_karin_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height anomaly quality flag

- standard_name :

- status_flag

- flag_meanings :

- suspect_large_ssh_delta suspect_large_ssh_std suspect_large_ssh_window_std suspect_beam_used suspect_less_than_nine_beams suspect_ssb_out_of_range suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_ssb_not_computable degraded_media_delays_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_tide_corrections_missing bad_ssb_missing bad_radiometer_corr_missing bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 1 2 4 8 16 64 128 256 512 1024 2048 4096 8192 32768 65536 131072 262144 524288 16777216 33554432 67108864 134217728 268435456 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 4279222239

- comment :

- Quality flag for the SSHA from KaRIn in the ssha_karin variable.

ssh_karin_2 (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height

- standard_name :

- sea surface height above reference ellipsoid

- units :

- m

- quality_flag :

- ssh_karin_2_qual

- valid_min :

- -15000000

- valid_max :

- 150000000

- comment :

- Fully corrected sea surface height measured by KaRIn. The height is relative to the reference ellipsoid defined in the global attributes. This value is computed using model-based estimates for wet troposphere effects on the KaRIn measurement (e.g., model_wet_tropo_cor and sea_state_bias_cor_2).

ssh_karin_2_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for sea surface height from KaRIn

- standard_name :

- status_flag

- flag_meanings :

- suspect_large_ssh_delta suspect_large_ssh_std suspect_large_ssh_window_std suspect_beam_used suspect_less_than_nine_beams suspect_ssb_out_of_range suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_ssb_not_computable degraded_media_delays_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 1 2 4 8 16 64 128 256 512 1024 2048 4096 8192 32768 65536 131072 262144 524288 16777216 33554432 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 3809460191

- comment :

- Quality flag for sea surface height from KaRIn in ssh_karin_2 variable.

ssha_karin_2 (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height anomaly

- units :

- m

- quality_flag :

- ssha_karin_2_qual

- valid_min :

- -1000000

- valid_max :

- 1000000

- comment :

- Sea surface height anomaly from the KaRIn measurement = ssh_karin_2 - mean_sea_surface_cnescls - solid_earth_tide - ocean_tide_fes – internal_tide_hret - pole_tide - dac.

ssha_karin_2_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea surface height anomaly quality flag

- standard_name :

- status_flag

- flag_meanings :

- suspect_large_ssh_delta suspect_large_ssh_std suspect_large_ssh_window_std suspect_beam_used suspect_less_than_nine_beams suspect_ssb_out_of_range suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_ssb_not_computable degraded_media_delays_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_tide_corrections_missing bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 1 2 4 8 16 64 128 256 512 1024 2048 4096 8192 32768 65536 131072 262144 524288 16777216 33554432 67108864 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 3876569055

- comment :

- Quality flag for the SSHA from KaRIn in the ssha_karin_2 variable

polarization_karin (num_lines, num_sides) object dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- polarization for each side of the KaRIn swath

- comment :

- H denotes co-polarized linear horizontal, V denotes co-polarized linear vertical.

swh_karin (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- significant wave height from KaRIn

- standard_name :

- sea_surface_wave_significant_height

- units :

- m

- quality_flag :

- swh_karin_qual

- valid_min :

- 0

- valid_max :

- 15000

- comment :

- Significant wave height from KaRIn volumetric correlation.

swh_karin_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for significant wave height from KaRIn.

- standard_name :

- status_flag

- flag_meanings :

- suspect_beam_used suspect_less_than_nine_beams suspect_rain_likely suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 8 16 32 128 256 512 1024 2048 4096 8192 131072 262144 524288 16777216 33554432 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 3809361848

- comment :

- Quality flag for significant wave height from KaRIn in swh_karin_qual variable.

swh_karin_uncert (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- 1-sigma uncertainty on significant wave height from KaRIn

- units :

- m

- valid_min :

- 0

- valid_max :

- 25000

- comment :

- 1-sigma uncertainty on significant wave height from KaRIn.

sig0_karin (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- normalized radar cross section (sigma0) from KaRIn

- standard_name :

- surface_backwards_scattering_coefficient_of_radar_wave

- units :

- 1

- quality_flag :

- sig0_karin_qual

- valid_min :

- -1000.0

- valid_max :

- 10000000.0

- comment :

- Normalized radar cross section (sigma0) from KaRIn in real, linear units (not decibels). The value may be negative due to noise subtraction. The value is corrected for instrument calibration and atmospheric attenuation. Radiometer measurements provide the atmospheric attenuation (sig0_cor_atmos_rad).
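As the comment notes, `sig0_karin` is stored in linear units and may be negative after noise subtraction. Since the logarithm is undefined for non-positive values, any conversion to decibels has to decide what to do with those pixels; this sketch returns NaN for them, which is an assumption rather than a product recommendation. `sigma0_to_db` is a hypothetical helper name.

```python
import numpy as np


def sigma0_to_db(sig0_linear):
    """Convert linear sigma0 to decibels (10 * log10); non-positive or NaN input gives NaN."""
    sig0 = np.asarray(sig0_linear, dtype="float64")
    out = np.full(sig0.shape, np.nan)
    positive = sig0 > 0
    out[positive] = 10.0 * np.log10(sig0[positive])
    return out


print(sigma0_to_db([1.0, 10.0, 100.0, -0.5, 0.0]))
```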

sig0_karin_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for sigma0 from KaRIn.

- standard_name :

- status_flag

- flag_meanings :

- suspect_large_nrcs_delta suspect_large_nrcs_std suspect_large_nrcs_window_std suspect_beam_used suspect_less_than_nine_beams suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_media_attenuation_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_radiometer_media_attenuation_missing bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 1 2 4 8 16 128 256 512 1024 2048 4096 8192 65536 131072 262144 524288 16777216 33554432 268435456 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 4077862815

- comment :

- Quality flag for sigma0 from KaRIn in sig0_karin_qual variable.

sig0_karin_uncert (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- 1-sigma uncertainty on sigma0 from KaRIn

- units :

- 1

- valid_min :

- 0.0

- valid_max :

- 1000.0

- comment :

- 1-sigma uncertainty on sigma0 from KaRIn.

sig0_karin_2 (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- normalized radar cross section (sigma0) from KaRIn

- standard_name :

- surface_backwards_scattering_coefficient_of_radar_wave

- units :

- 1

- quality_flag :

- sig0_karin_2_qual

- valid_min :

- -1000.0

- valid_max :

- 10000000.0

- comment :

- Normalized radar cross section (sigma0) from KaRIn in real, linear units (not decibels). The value may be negative due to noise subtraction. The value is corrected for instrument calibration and atmospheric attenuation. A meteorological model provides the atmospheric attenuation (sig0_cor_atmos_model).

sig0_karin_2_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for sigma0 from KaRIn.

- standard_name :

- status_flag

- flag_meanings :

- suspect_large_nrcs_delta suspect_large_nrcs_std suspect_large_nrcs_window_std suspect_beam_used suspect_less_than_nine_beams suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_media_attenuation_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 1 2 4 8 16 128 256 512 1024 2048 4096 8192 65536 131072 262144 524288 16777216 33554432 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 3809427359

- comment :

- Quality flag for sigma0 from KaRIn in sig0_karin_2 variable.

wind_speed_karin (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- wind speed from KaRIn

- standard_name :

- wind_speed

- units :

- m/s

- quality_flag :

- wind_speed_karin_qual

- valid_min :

- 0

- valid_max :

- 65000

- comment :

- Wind speed from KaRIn computed from sig0_karin.

wind_speed_karin_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for wind speed from KaRIn.

- standard_name :

- status_flag

- flag_meanings :

- suspect_beam_used suspect_less_than_nine_beams suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_media_attenuation_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_radiometer_media_attenuation_missing bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 8 16 128 256 512 1024 2048 4096 8192 65536 131072 262144 524288 16777216 33554432 268435456 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 4077862808

- comment :

- Quality flag for wind speed from KaRIn in wind_speed_karin variable.

wind_speed_karin_2 (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- wind speed from KaRIn

- standard_name :

- wind_speed

- units :

- m/s

- quality_flag :

- wind_speed_karin_2_qual

- valid_min :

- 0

- valid_max :

- 65000

- comment :

- Wind speed from KaRIn computed from sig0_karin_2.

wind_speed_karin_2_qual (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for wind speed from KaRIn.

- standard_name :

- status_flag

- flag_meanings :

- suspect_beam_used suspect_less_than_nine_beams suspect_pixel_used suspect_num_pt_avg suspect_karin_telem suspect_orbit_control suspect_sc_event_flag suspect_tvp_qual suspect_volumetric_corr degraded_media_attenuation_missing degraded_beam_used degraded_large_attitude degraded_karin_ifft_overflow bad_karin_telem bad_very_large_attitude bad_outside_of_range degraded bad_not_usable

- flag_masks :

- [ 8 16 128 256 512 1024 2048 4096 8192 65536 131072 262144 524288 16777216 33554432 536870912 1073741824 2147483648]

- valid_min :

- 0

- valid_max :

- 3809427352

- comment :

- Quality flag for wind speed from KaRIn in wind_speed_karin_2 variable.

num_pt_avg (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- number of samples averaged

- units :

- 1

- valid_min :

- 0

- valid_max :

- 289

- comment :

- Number of native unsmoothed, beam-combined KaRIn samples averaged.

swh_wind_speed_karin_source (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- source flag for significant wave height information used to compute wind speed from KaRIn

- standard_name :

- status_flag

- flag_meanings :

- nadir_altimeter karin model

- flag_masks :

- [1 2 4]

- valid_min :

- 0

- valid_max :

- 7

- comment :

- Bit flag that indicates the source of significant wave height information that was used to compute the wind speed estimate from KaRIn data in wind_speed_karin.

swh_wind_speed_karin_source_2 (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- source flag for significant wave height information used to compute wind speed from KaRIn

- standard_name :

- status_flag

- flag_meanings :

- nadir_altimeter karin model

- flag_masks :

- [1 2 4]

- valid_min :

- 0

- valid_max :

- 7

- comment :

- Bit flag that indicates the source of significant wave height information that was used to compute the wind speed estimate from KaRIn data in wind_speed_karin_2.

swh_nadir_altimeter (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- significant wave height from nadir altimeter

- standard_name :

- sea_surface_wave_significant_height

- units :

- m

- valid_min :

- 0

- valid_max :

- 15000

- comment :

- Significant wave height from nadir altimeter.

swh_model (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- significant wave height from wave model

- standard_name :

- sea_surface_wave_significant_height

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- m

- valid_min :

- 0

- valid_max :

- 15000

- comment :

- Significant wave height from model.

mean_wave_direction (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean sea surface wave direction

- source :

- Meteo France Wave Model (MF-WAM)

- institution :

- Meteo France

- units :

- degree

- valid_min :

- 0

- valid_max :

- 36000

- comment :

- Mean sea surface wave direction.

mean_wave_period_t02 (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- t02 mean wave period

- standard_name :

- sea_surface_wind_wave_mean_period_from_variance_spectral_density_second_frequency_moment

- source :

- Meteo France Wave Model (MF-WAM)

- institution :

- Meteo France

- units :

- s

- valid_min :

- 0

- valid_max :

- 10000

- comment :

- Sea surface wind wave mean period from model spectral density second moment.

wind_speed_model_u (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- u component of model wind

- standard_name :

- eastward_wind

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- m/s

- valid_min :

- -30000

- valid_max :

- 30000

- comment :

- Eastward component of the atmospheric model wind vector at 10 meters.

wind_speed_model_v (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- v component of model wind

- standard_name :

- northward_wind

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- m/s

- valid_min :

- -30000

- valid_max :

- 30000

- comment :

- Northward component of the atmospheric model wind vector at 10 meters.
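`wind_speed_model_u` and `wind_speed_model_v` are the eastward and northward components of the 10 m model wind, so their vector magnitude gives a model wind speed that could be set beside `wind_speed_karin`. The direction helper uses the meteorological "blowing from" convention (degrees clockwise from true north), which is an assumption worth checking against whatever you compare it with. Both function names are hypothetical.

```python
import numpy as np


def wind_speed_from_components(u, v):
    """Magnitude of the horizontal wind vector, in the units of u and v (here m/s)."""
    return np.hypot(np.asarray(u, dtype="float64"), np.asarray(v, dtype="float64"))


def wind_direction_from(u, v):
    """Direction the wind blows from, in degrees clockwise from true north, in [0, 360)."""
    u = np.asarray(u, dtype="float64")
    v = np.asarray(v, dtype="float64")
    # arctan2(u, v) is the compass bearing the wind blows toward; add 180 for "from".
    return (np.degrees(np.arctan2(u, v)) + 180.0) % 360.0


print(wind_speed_from_components(3.0, 4.0), wind_direction_from(5.0, 0.0))
```

For example, a purely eastward wind (u = 5, v = 0) blows from the west, which is 270 degrees.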

wind_speed_rad (num_lines, num_sides) float64 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- wind speed from radiometer

- standard_name :

- wind_speed

- source :

- Advanced Microwave Radiometer

- units :

- m/s

- valid_min :

- 0

- valid_max :

- 65000

- comment :

- Wind speed from radiometer measurements.

Array: 1.51 MiB, shape (98660, 2); chunk: 154.16 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- distance_to_coast (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- distance to coast

- source :

- MODIS/GlobCover

- institution :

- European Space Agency

- units :

- m

- valid_min :

- -21000

- valid_max :

- 21000

- comment :

- Approximate distance to the nearest coast point along the Earth surface.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- heading_to_coast (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- heading to coast

- units :

- degrees

- valid_min :

- 0

- valid_max :

- 35999

- comment :

- Approximate compass heading (0-360 degrees with respect to true north) to the nearest coast point.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- ancillary_surface_classification_flag (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- surface classification

- standard_name :

- status_flag

- source :

- MODIS/GlobCover

- institution :

- European Space Agency

- flag_meanings :

- open_ocean land continental_water aquatic_vegetation continental_ice_snow floating_ice salted_basin

- flag_values :

- [0 1 2 3 4 5 6]

- valid_min :

- 0

- valid_max :

- 6

- comment :

- 7-state surface type classification computed from a mask built with MODIS and GlobCover data.
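The `flag_meanings` and `flag_values` attributes follow the CF flag convention: the nth word in `flag_meanings` names the nth entry in `flag_values`. The sketch below turns them into a lookup table and masks everything except one surface class. Both helper names are hypothetical. The sketch works on NumPy arrays. With xarray you would pass `ds.ancillary_surface_classification_flag.attrs["flag_meanings"]` and `...attrs["flag_values"]`, where `ds` is an assumed name for the opened dataset, and either pass `.values` for the data or use `DataArray.where` to keep coordinates.

```python
import numpy as np


def flag_lookup(flag_meanings, flag_values):
    """Map each CF flag meaning (space-separated string) to its integer value."""
    names = flag_meanings.split()
    values = [int(v) for v in np.atleast_1d(flag_values)]
    if len(names) != len(values):
        raise ValueError("flag_meanings and flag_values have different lengths")
    return dict(zip(names, values))


def keep_surface_class(data, surface_flag, wanted, lookup):
    """Return a float copy of data with pixels outside the `wanted` class set to NaN."""
    data = np.asarray(data, dtype=float)
    return np.where(np.asarray(surface_flag) == lookup[wanted], data, np.nan)


lut = flag_lookup(
    "open_ocean land continental_water aquatic_vegetation "
    "continental_ice_snow floating_ice salted_basin",
    [0, 1, 2, 3, 4, 5, 6],
)
print(lut["open_ocean"], lut["floating_ice"])  # 0 5
print(keep_surface_class([1.0, 2.0, 3.0], [0, 1, 0], "open_ocean", lut))
```

The same two helpers apply unchanged to the other flag variables in this listing, for example `dynamic_ice_flag` or `rain_flag`.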

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- dynamic_ice_flag (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- dynamic ice flag

- standard_name :

- status_flag

- source :

- EUMETSAT Ocean and Sea Ice Satellite Applications Facility

- institution :

- EUMETSAT

- flag_meanings :

- no_ice probable_ice ice no_data

- flag_values :

- [0 1 2 3]

- valid_min :

- 0

- valid_max :

- 3

- comment :

- Dynamic ice flag for the location of the KaRIn measurement.

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- rain_flag (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- rain flag

- standard_name :

- status_flag

- flag_meanings :

- no_rain probable_rain rain no_data

- flag_values :

- [0 1 2 3]

- valid_min :

- 0

- valid_max :

- 3

- comment :

- Flag indicates that signal is attenuated, probably from rain.

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- rad_surface_type_flag (num_lines, num_sides) float32 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- radiometer surface type flag

- standard_name :

- status_flag

- source :

- Advanced Microwave Radiometer

- flag_meanings :

- open_ocean coastal_ocean land

- flag_values :

- [0 1 2]

- valid_min :

- 0

- valid_max :

- 2

- comment :

- Flag indicating the validity and type of processing applied to generate the wet troposphere correction (rad_wet_tropo_cor). A value of 0 indicates that open ocean processing is used, a value of 1 indicates coastal processing, and a value of 2 indicates that rad_wet_tropo_cor is invalid due to land contamination.

Array: 770.78 kiB, shape (98660, 2); chunk: 77.08 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- sc_altitude (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- altitude of KMSF origin

- standard_name :

- height_above_reference_ellipsoid

- units :

- m

- quality_flag :

- orbit_qual

- valid_min :

- 0

- valid_max :

- 2000000000

- comment :

- Altitude of the KMSF origin.

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- orbit_alt_rate (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- orbital altitude rate with respect to mean sea surface

- units :

- m/s

- valid_min :

- -3500

- valid_max :

- 3500

- comment :

- Orbital altitude rate with respect to the mean sea surface.

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- cross_track_angle (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- cross-track angle from true north

- units :

- degrees

- valid_min :

- 0

- valid_max :

- 359999999

- comment :

- Angle with respect to true north of the cross-track direction to the right of the spacecraft velocity vector.

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- sc_roll (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- roll of the spacecraft

- standard_name :

- platform_roll_angle

- units :

- degrees

- quality_flag :

- orbit_qual

- valid_min :

- -1799999

- valid_max :

- 1800000

- comment :

- KMSF attitude roll angle; positive values move the +y antenna down.

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- sc_pitch (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- pitch of the spacecraft

- standard_name :

- platform_pitch_angle

- units :

- degrees

- quality_flag :

- orbit_qual

- valid_min :

- -1799999

- valid_max :

- 1800000

- comment :

- KMSF attitude pitch angle; positive values move the KMSF +x axis up.

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- sc_yaw (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- yaw of the spacecraft

- standard_name :

- platform_yaw_angle

- units :

- degrees

- quality_flag :

- orbit_qual

- valid_min :

- -1799999

- valid_max :

- 1800000

- comment :

- KMSF attitude yaw angle relative to the nadir track. The yaw angle is a right-handed rotation about the nadir (downward) direction. A yaw value of 0 deg indicates that the KMSF +x axis is aligned with the horizontal component of the Earth-relative velocity vector. A yaw value of 180 deg indicates that the spacecraft is in a yaw-flipped state, with the KMSF -x axis aligned with the horizontal component of the Earth-relative velocity vector.

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- velocity_heading (num_lines) float64 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- heading of the spacecraft Earth-relative velocity vector

- units :

- degrees

- quality_flag :

- orbit_qual

- valid_min :

- 0

- valid_max :

- 359999999

- comment :

- Angle with respect to true north of the horizontal component of the spacecraft Earth-relative velocity vector. A value of 90 deg indicates that the spacecraft velocity vector pointed due east. Values between 0 and 90 deg indicate that the velocity vector has a northward component, and values between 90 and 180 deg indicate that the velocity vector has a southward component.
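The comment above only spells out headings between 0 and 180 degrees. The same rule extends to the full circle because the northward component of a unit vector with compass heading h is cos(h). A positive cosine means the spacecraft is moving north, and a negative one means it is moving south. These are often called ascending and descending passes. Below is a minimal sketch of that rule. The function name and the small tolerance used to treat due east/west as undefined are illustrative choices, not part of the product specification.

```python
import numpy as np


def track_direction(velocity_heading):
    """Label each line 'north', 'south' or 'undefined' from velocity_heading.

    velocity_heading is in degrees clockwise from true north. The sign of
    cos(heading) is the sign of the northward velocity component. NaN headings
    and headings within floating-point noise of due east/west are 'undefined'.
    """
    north = np.cos(np.radians(np.asarray(velocity_heading, dtype=float)))
    labels = np.full(north.shape, "undefined", dtype=object)
    labels[north > 1e-12] = "north"
    labels[north < -1e-12] = "south"
    return labels


print(track_direction([10.0, 100.0, 200.0, 300.0]))
```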

Array: 770.78 kiB, shape (98660,); chunk: 77.08 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- orbit_qual (num_lines) float32 dask.array<chunksize=(9866,), meta=np.ndarray>

- long_name :

- orbit quality flag

- standard_name :

- status_flag

- flag_meanings :

- good orbit_estimated_during_a_maneuver orbit_interpolated_over_data_gap orbit_extrapolated_for_a_duration_less_than_1_day orbit_extrapolated_for_a_duration_between_1_to_2_days orbit_extrapolated_for_a_duration_greater_than_2_days bad_attitude

- flag_values :

- [ 0 4 5 6 7 8 64]

- valid_min :

- 0

- valid_max :

- 64

- comment :

- Flag indicating the quality of the reconstructed attitude and orbit ephemeris. A value of 0 indicates the reconstructed attitude and orbit ephemeris are both good. Non-zero values less than 64 indicate that the reconstructed attitude is good but there are issues that degrade the quality of the orbit ephemeris. A value of 64 indicates that the reconstructed attitude is degraded or bad.
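The comment above groups `orbit_qual` into three ranges. Those ranges can be turned into readable labels, for example to count how many lines have degraded ephemeris before filtering. This is a minimal sketch that follows the comment's thresholds. The category names are illustrative. Fill values such as NaN fall through to `"unknown"`.

```python
import numpy as np


def orbit_quality_category(orbit_qual):
    """Group orbit_qual values as described in its comment attribute.

    0 -> attitude and orbit ephemeris good; 1..63 -> attitude good, orbit degraded;
    64 -> attitude degraded or bad. Anything else (e.g. NaN fill) -> 'unknown'.
    """
    q = np.asarray(orbit_qual, dtype=float)
    out = np.full(q.shape, "unknown", dtype=object)
    out[q == 0] = "good"
    out[(q > 0) & (q < 64)] = "orbit_degraded"
    out[q == 64] = "attitude_bad"
    return out


print(orbit_quality_category([0, 5, 64, np.nan]))
```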

Array: 385.39 kiB, shape (98660,); chunk: 38.54 kiB, shape (9866,); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- latitude_avg_ssh (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- weighted average latitude of samples used to compute SSH

- standard_name :

- latitude

- units :

- degrees_north

- valid_min :

- -80000000

- valid_max :

- 80000000

- comment :

- Latitude of measurement [-80,80]. Positive latitude is North latitude, negative latitude is South latitude. This value may be biased away from a nominal grid location if some of the native, unsmoothed samples were discarded during processing.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- longitude_avg_ssh (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- weighted average longitude of samples used to compute SSH

- standard_name :

- longitude

- units :

- degrees_east

- valid_min :

- 0

- valid_max :

- 359999999

- comment :

- Longitude of measurement. East longitude relative to Greenwich meridian. This value may be biased away from a nominal grid location if some of the native, unsmoothed samples were discarded during processing.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- cross_track_distance (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- cross track distance

- units :

- m

- valid_min :

- -75000.0

- valid_max :

- 75000.0

- comment :

- Distance of sample from nadir. Negative values indicate the left side of the swath, and positive values indicate the right side of the swath.
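Because the sign of `cross_track_distance` encodes the swath side, the two KaRIn swaths can be separated with simple masks. The sketch below also accepts an optional distance band from nadir. The `min_km`/`max_km` band is a hypothetical convenience for illustration, not a recommended quality cut. Its 75 km default only mirrors the variable's valid range of ±75000 m.

```python
import numpy as np


def swath_side_masks(cross_track_distance, min_km=0.0, max_km=75.0):
    """Return (left, right) boolean masks from cross_track_distance in metres.

    Negative distances are the left swath and positive ones the right swath.
    Only pixels whose distance from nadir lies in [min_km, max_km] are kept;
    NaN distances are excluded from both masks.
    """
    d = np.asarray(cross_track_distance, dtype=float)
    dist_km = np.abs(d) / 1000.0
    in_band = (dist_km >= min_km) & (dist_km <= max_km)
    return (d < 0) & in_band, (d > 0) & in_band


left, right = swath_side_masks([-60000.0, -5000.0, 20000.0, 70000.0], min_km=10, max_km=60)
print(left, right)
```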

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- x_factor (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- radiometric calibration X factor as a composite value for the X factors of the +y and -y channels

- units :

- 1

- valid_min :

- 0.0

- valid_max :

- 1e+20

- comment :

- Radiometric calibration X factor as a linear power ratio.

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- sig0_cor_atmos_model (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- two-way atmospheric correction to sigma0 from model

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- 1

- quality_flag :

- sig0_karin_2_qual

- valid_min :

- 1.0

- valid_max :

- 10.0

- comment :

- Atmospheric correction to sigma0 from weather model data as a linear power multiplier (not decibels). sig0_cor_atmos_model is already applied in computing sig0_karin_2.
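The comment stresses that this correction is a linear power multiplier rather than a value in decibels. The same holds for `x_factor` above. If you prefer to inspect such ratios in dB, the standard conversion is 10·log10(ratio). A minimal sketch follows. It only converts units and makes no assumption about how the correction enters `sig0_karin_2`.

```python
import numpy as np


def linear_to_db(x):
    """Convert a linear power ratio to decibels (0 maps to -inf, negatives to NaN)."""
    x = np.asarray(x, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        return 10.0 * np.log10(x)


def db_to_linear(db):
    """Inverse of linear_to_db."""
    return 10.0 ** (np.asarray(db, dtype=float) / 10.0)


print(linear_to_db([1.0, 10.0, 100.0]))
```

Given the valid range of 1.0 to 10.0 stated above, this correction corresponds to 0 to 10 dB.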

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- sig0_cor_atmos_rad (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- two-way atmospheric correction to sigma0 from radiometer data

- source :

- Advanced Microwave Radiometer

- units :

- 1

- quality_flag :

- sig0_karin_qual

- valid_min :

- 1.0

- valid_max :

- 10.0

- comment :

- Atmospheric correction to sigma0 from radiometer data as a linear power multiplier (not decibels). sig0_cor_atmos_rad is already applied in computing sig0_karin.

Array: 25.97 MiB, shape (98660, 69); chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float32 numpy.ndarray

- doppler_centroid (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- doppler centroid estimated by KaRIn

- units :

- 1/s

- valid_min :

- -30000

- valid_max :

- 30000

- comment :

- Doppler centroid (in hertz or cycles per second) estimated by KaRIn.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- phase_bias_ref_surface (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- height of reference surface used for phase bias calculation

- units :

- m

- valid_min :

- -15000000

- valid_max :

- 150000000

- comment :

- Height (relative to the reference ellipsoid) of the reference surface used for phase bias calculation during L1B processing.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- obp_ref_surface (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- height of reference surface used by on-board-processor

- units :

- m

- valid_min :

- -15000000

- valid_max :

- 150000000

- comment :

- Height (relative to the reference ellipsoid) of the reference surface used by the KaRIn on-board processor.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- rad_tmb_187 (num_lines, num_sides) float64 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- radiometer main beam brightness temperature at 18.7 GHz

- standard_name :

- toa_brightness_temperature

- source :

- Advanced Microwave Radiometer

- units :

- K

- valid_min :

- 13000

- valid_max :

- 25000

- comment :

- Main beam brightness temperature measurement at 18.7 GHz. Value is unsmoothed (along-track averaging has not been performed).

Array: 1.51 MiB, shape (98660, 2); chunk: 154.16 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- rad_tmb_238 (num_lines, num_sides) float64 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- radiometer main beam brightness temperature at 23.8 GHz

- standard_name :

- toa_brightness_temperature

- source :

- Advanced Microwave Radiometer

- units :

- K

- valid_min :

- 13000

- valid_max :

- 25000

- comment :

- Main beam brightness temperature measurement at 23.8 GHz. Value is unsmoothed (along-track averaging has not been performed).

Array: 1.51 MiB, shape (98660, 2); chunk: 154.16 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- rad_tmb_340 (num_lines, num_sides) float64 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- radiometer main beam brightness temperature at 34.0 GHz

- standard_name :

- toa_brightness_temperature

- source :

- Advanced Microwave Radiometer

- units :

- K

- valid_min :

- 15000

- valid_max :

- 28000

- comment :

- Main beam brightness temperature measurement at 34.0 GHz. Value is unsmoothed (along-track averaging has not been performed).

Array: 1.51 MiB, shape (98660, 2); chunk: 154.16 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- rad_water_vapor (num_lines, num_sides) float64 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- water vapor content from radiometer

- standard_name :

- atmosphere_water_vapor_content

- source :

- Advanced Microwave Radiometer

- units :

- kg/m^2

- valid_min :

- 0

- valid_max :

- 15000

- comment :

- Integrated water vapor content from radiometer measurements.

Array: 1.51 MiB, shape (98660, 2); chunk: 154.16 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- rad_cloud_liquid_water (num_lines, num_sides) float64 dask.array<chunksize=(9866, 2), meta=np.ndarray>

- long_name :

- liquid water content from radiometer

- standard_name :

- atmosphere_cloud_liquid_water_content

- source :

- Advanced Microwave Radiometer

- units :

- kg/m^2

- valid_min :

- 0

- valid_max :

- 2000

- comment :

- Integrated cloud liquid water content from radiometer measurements.

Array: 1.51 MiB, shape (98660, 2); chunk: 154.16 kiB, shape (9866, 2); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- mean_sea_surface_cnescls (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean sea surface height (CNES/CLS)

- source :

- CNES_CLS_2022

- institution :

- CNES/CLS

- units :

- m

- valid_min :

- -1500000

- valid_max :

- 1500000

- comment :

- Mean sea surface height above the reference ellipsoid. The value is referenced to the mean tide system, i.e. includes the permanent tide (zero frequency).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- mean_sea_surface_cnescls_uncert (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean sea surface height accuracy (CNES/CLS)

- source :

- CNES_CLS_2022

- institution :

- CNES/CLS

- units :

- m

- valid_min :

- 0

- valid_max :

- 10000

- comment :

- Accuracy of the mean sea surface height (mean_sea_surface_cnescls).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- mean_sea_surface_dtu (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean sea surface height (DTU)

- source :

- DTU18

- institution :

- DTU

- units :

- m

- valid_min :

- -1500000

- valid_max :

- 1500000

- comment :

- Mean sea surface height above the reference ellipsoid. The value is referenced to the mean tide system, i.e. includes the permanent tide (zero frequency).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- mean_sea_surface_dtu_uncert (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean sea surface height accuracy (DTU)

- source :

- DTU18

- institution :

- DTU

- units :

- m

- valid_min :

- 0

- valid_max :

- 10000

- comment :

- Accuracy of the mean sea surface height (mean_sea_surface_dtu)

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- geoid (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- geoid height

- standard_name :

- geoid_height_above_reference_ellipsoid

- source :

- EGM2008 (Pavlis et al., 2012)

- units :

- m

- valid_min :

- -1500000

- valid_max :

- 1500000

- comment :

- Geoid height above the reference ellipsoid with a correction to refer the value to the mean tide system, i.e. includes the permanent tide (zero frequency).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- mean_dynamic_topography (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean dynamic topography

- source :

- CNES_CLS_2022

- institution :

- CNES/CLS

- units :

- m

- valid_min :

- -30000

- valid_max :

- 30000

- comment :

- Mean dynamic topography above the geoid.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- mean_dynamic_topography_uncert (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- mean dynamic topography accuracy

- source :

- CNES_CLS_2022

- institution :

- CNES/CLS

- units :

- m

- valid_min :

- 0

- valid_max :

- 10000

- comment :

- Accuracy of the mean dynamic topography.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- depth_or_elevation (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- ocean depth or land elevation

- source :

- Altimeter Corrected Elevations, version 2

- institution :

- European Space Agency

- units :

- m

- valid_min :

- -12000

- valid_max :

- 10000

- comment :

- Ocean depth or land elevation above reference ellipsoid. Ocean depth (bathymetry) is given as negative values, and land elevation positive values.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- solid_earth_tide (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- solid Earth tide height

- source :

- Cartwright and Taylor (1971) and Cartwright and Edden (1973)

- units :

- m

- valid_min :

- -10000

- valid_max :

- 10000

- comment :

- Solid-Earth (body) tide height. The zero-frequency permanent tide component is not included.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- ocean_tide_fes (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- geocentric ocean tide height (FES)

- source :

- FES2014b (Carrere et al., 2016)

- institution :

- LEGOS/CNES

- units :

- m

- valid_min :

- -300000

- valid_max :

- 300000

- comment :

- Geocentric ocean tide height. Includes the sum total of the ocean tide, the corresponding load tide (load_tide_fes) and equilibrium long-period ocean tide height (ocean_tide_eq).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- ocean_tide_got (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- geocentric ocean tide height (GOT)

- source :

- GOT4.10c (Ray, 2013)

- institution :

- GSFC

- units :

- m

- valid_min :

- -300000

- valid_max :

- 300000

- comment :

- Geocentric ocean tide height. Includes the sum total of the ocean tide, the corresponding load tide (load_tide_got) and equilibrium long-period ocean tide height (ocean_tide_eq).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- load_tide_fes (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- geocentric load tide height (FES)

- source :

- FES2014b (Carrere et al., 2016)

- institution :

- LEGOS/CNES

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Geocentric load tide height. The effect of the ocean tide loading of the Earth's crust. This value has already been added to the corresponding ocean tide height value (ocean_tide_fes).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- load_tide_got (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- geocentric load tide height (GOT)

- source :

- GOT4.10c (Ray, 2013)

- institution :

- GSFC

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Geocentric load tide height. The effect of the ocean tide loading of the Earth's crust. This value has already been added to the corresponding ocean tide height value (ocean_tide_got).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- ocean_tide_eq (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- equilibrium long-period ocean tide height

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Equilibrium long-period ocean tide height. This value has already been added to the corresponding ocean tide height values (ocean_tide_fes and ocean_tide_got).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- ocean_tide_non_eq (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- non-equilibrium long-period ocean tide height

- source :

- FES2014b (Carrere et al., 2016)

- institution :

- LEGOS/CNES

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Non-equilibrium long-period ocean tide height. This value is reported as a relative displacement with respect to ocean_tide_eq. This value can be added to ocean_tide_eq, ocean_tide_fes, or ocean_tide_got, or subtracted from ssha_karin and ssha_karin_2, to account for the total long-period ocean tides from equilibrium and non-equilibrium contributions.
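Following the comment above, the non-equilibrium long-period tide is an optional extra correction that you subtract yourself. The sketch below is a minimal illustration. The function name is hypothetical. Plain arithmetic works the same on NumPy arrays and on lazily loaded xarray DataArrays such as `ds.ssha_karin_2` and `ds.ocean_tide_non_eq`, where `ds` is an assumed name for the opened dataset, and NaNs propagate.

```python
import numpy as np


def remove_non_eq_long_period_tide(ssha, ocean_tide_non_eq):
    """Subtract the non-equilibrium long-period ocean tide from ssha_karin or
    ssha_karin_2, as described in the ocean_tide_non_eq comment attribute."""
    return ssha - ocean_tide_non_eq


print(remove_non_eq_long_period_tide(np.array([0.10, 0.20]), np.array([0.01, -0.02])))
```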

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- internal_tide_hret (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- coherent internal tide (HRET)

- source :

- Zaron (2019)

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Coherent internal ocean tide. This value is subtracted from the ssh_karin and ssh_karin_2 to compute ssha_karin and ssha_karin_2, respectively.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- internal_tide_sol2 (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- coherent internal tide (Model 2)

- source :

- None

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Coherent internal tide. This value is currently always defaulted.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- pole_tide (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- geocentric pole tide height

- source :

- Wahr (1985) and Desai et al. (2015)

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Geocentric pole tide height. The total of the contribution from the solid-Earth (body) pole tide height, the ocean pole tide height, and the load pole tide height (i.e., the effect of the ocean pole tide loading of the Earth's crust).

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- dac (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- dynamic atmospheric correction

- source :

- MOG2D

- institution :

- LEGOS/CNES/CLS

- units :

- m

- valid_min :

- -12000

- valid_max :

- 12000

- comment :

- Model estimate of the effect on sea surface topography due to high frequency air pressure and wind effects and the low-frequency height from inverted barometer effect (inv_bar_cor). This value is subtracted from the ssh_karin and ssh_karin_2 to compute ssha_karin and ssha_karin_2, respectively. Use only one of inv_bar_cor and dac.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- inv_bar_cor (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- static inverse barometer effect on sea surface height

- units :

- m

- valid_min :

- -2000

- valid_max :

- 2000

- comment :

- Estimate of static effect of atmospheric pressure on sea surface height. Above average pressure lowers sea surface height. Computed by interpolating ECMWF pressure fields in space and time. The value is included in dac. To apply, add dac to ssha_karin and ssha_karin_2 and subtract inv_bar_cor.
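The `dac` and `inv_bar_cor` comments together describe how to swap one atmospheric correction for the other. `dac` was already subtracted when `ssha_karin`/`ssha_karin_2` were computed, so you add it back and then subtract the static inverse barometer instead, never applying both. This is a minimal sketch of that arithmetic. The function name is hypothetical. It works on NumPy arrays or xarray DataArrays.

```python
import numpy as np


def use_inverse_barometer_instead_of_dac(ssha, dac, inv_bar_cor):
    """Replace the dynamic atmospheric correction in ssha_karin / ssha_karin_2
    with the static inverse barometer correction (add dac back, subtract inv_bar_cor)."""
    return ssha + dac - inv_bar_cor


print(use_inverse_barometer_instead_of_dac(np.array([0.50]), np.array([0.10]), np.array([0.08])))
```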

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- model_dry_tropo_cor (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- dry troposphere vertical correction

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- m

- quality_flag :

- ssh_karin_2_qual

- valid_min :

- -30000

- valid_max :

- -15000

- comment :

- Equivalent vertical correction due to dry troposphere delay. The reported sea surface height, latitude and longitude are computed after adding negative media corrections to uncorrected range along slant-range paths, accounting for the differential delay between the two KaRIn antennas. The equivalent vertical correction is computed by applying obliquity factors to the slant-path correction. Adding the reported correction to the reported sea surface height results in the uncorrected sea surface height.
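The last sentence of this comment gives the sign convention: adding the reported correction to the reported sea surface height undoes that correction. The wet troposphere and ionosphere corrections below use the same wording. This is a minimal sketch of that convention. The helper name is hypothetical. Because the media corrections are negative, removing one lowers the height.

```python
import numpy as np


def undo_media_correction(ssh, correction):
    """Return the sea surface height without one media correction
    (e.g. model_dry_tropo_cor), per the 'adding the reported correction ...
    results in the uncorrected sea surface height' convention."""
    return ssh + correction


print(undo_media_correction(np.array([10.0]), np.array([-2.3])))
```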

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- model_wet_tropo_cor (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- wet troposphere vertical correction from weather model data

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- m

- quality_flag :

- ssh_karin_2_qual

- valid_min :

- -10000

- valid_max :

- 0

- comment :

- Equivalent vertical correction due to wet troposphere delay from weather model data. The reported pixel height, latitude and longitude are computed after adding negative media corrections to uncorrected range along slant-range paths, accounting for the differential delay between the two KaRIn antennas. The equivalent vertical correction is computed by applying obliquity factors to the slant-path correction. Adding the reported correction to the reported sea surface height (ssh_karin_2) results in the uncorrected sea surface height.

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- rad_wet_tropo_cor (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- wet troposphere vertical correction from radiometer data

- source :

- Advanced Microwave Radiometer

- units :

- m

- quality_flag :

- ssh_karin_qual

- valid_min :

- -10000

- valid_max :

- 0

- comment :

- Equivalent vertical correction due to wet troposphere delay from radiometer measurements. The reported pixel height, latitude and longitude are computed after adding negative media corrections to uncorrected range along slant-range paths, accounting for the differential delay between the two KaRIn antennas. The equivalent vertical correction is computed by applying obliquity factors to the slant-path correction. Adding the reported correction to the reported sea surface height (ssh_karin) results in the uncorrected sea surface height.
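Per these comments, `ssh_karin_2` carries the model wet troposphere correction and `ssh_karin` the radiometer one. Under the sign convention above (uncorrected = reported SSH + correction), you could put `ssh_karin_2` on the radiometer correction by undoing one and applying the other. The sketch below is a hedged illustration derived only from those comments. The function name is hypothetical. Check `rad_surface_type_flag` first, since a value of 2 marks `rad_wet_tropo_cor` as invalid over land.

```python
import numpy as np


def swap_wet_tropo_to_radiometer(ssh_karin_2, model_wet_tropo_cor, rad_wet_tropo_cor):
    """Re-correct ssh_karin_2 with the radiometer wet troposphere correction.

    Adding model_wet_tropo_cor gives the height without a wet troposphere
    correction; subtracting rad_wet_tropo_cor applies the radiometer one with
    the same sign convention.
    """
    uncorrected = ssh_karin_2 + model_wet_tropo_cor
    return uncorrected - rad_wet_tropo_cor


print(swap_wet_tropo_to_radiometer(np.array([1.0]), np.array([-0.20]), np.array([-0.25])))
```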

Array: 51.94 MiB, shape (98660, 69); chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers; data type: float64 numpy.ndarray

- iono_cor_gim_ka (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- ionosphere vertical correction

- source :

- Global Ionosphere Maps

- institution :

- JPL

- units :

- m

- quality_flag :

- ssh_karin_2_qual

- valid_min :

- -5000

- valid_max :

- 0

- comment :

- Equivalent vertical correction due to ionosphere delay. The reported sea surface height, latitude and longitude are computed after adding negative media corrections to uncorrected range along slant-range paths, accounting for the differential delay between the two KaRIn antennas. The equivalent vertical correction is computed by applying obliquity factors to the slant-path correction. Adding the reported correction to the reported sea surface height results in the uncorrected sea surface height.

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- height_cor_xover (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- height correction from crossover calibration

- units :

- m

- quality_flag :

- height_cor_xover_qual

- valid_min :

- -100000

- valid_max :

- 100000

- comment :

- Height correction from crossover calibration. To apply this correction the value of height_cor_xover should be added to the value of ssh_karin, ssh_karin_2, ssha_karin, and ssha_karin_2.
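The comment states that `height_cor_xover` should be added to `ssh_karin`, `ssh_karin_2`, `ssha_karin` and `ssha_karin_2`. The sketch below does exactly that, using a hypothetical synthetic dataset in place of the real granule. `apply_xover` is an illustrative helper, not part of xarray or earthaccess. It copies each variable's attributes explicitly because xarray arithmetic does not necessarily preserve them.

```python
import numpy as np
import xarray as xr

dims = ("num_lines", "num_pixels")

# Hypothetical stand-in for the opened granule (values are made up).
ds = xr.Dataset(
    {
        "ssh_karin": (dims, np.full((2, 3), 20.0), {"units": "m"}),
        "ssh_karin_2": (dims, np.full((2, 3), 20.1), {"units": "m"}),
        "ssha_karin": (dims, np.full((2, 3), 0.30), {"units": "m"}),
        "ssha_karin_2": (dims, np.full((2, 3), 0.25), {"units": "m"}),
        "height_cor_xover": (dims, np.array([[0.01, -0.02, 0.0], [0.03, 0.0, -0.01]])),
    }
)

# Variables the comment says should receive the crossover correction.
HEIGHT_VARS = ["ssh_karin", "ssh_karin_2", "ssha_karin", "ssha_karin_2"]


def apply_xover(ds):
    """Return a copy of ds with height_cor_xover added to each height variable."""
    out = ds.copy()
    for name in HEIGHT_VARS:
        corrected = ds[name] + ds["height_cor_xover"]
        corrected.attrs = dict(ds[name].attrs)  # keep units and other metadata
        out[name] = corrected
    return out


ds_xover = apply_xover(ds)
print(ds_xover["ssha_karin"].values)
```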

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- height_cor_xover_qual (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- quality flag for height correction from crossover calibration

- standard_name :

- status_flag

- flag_meanings :

- good suspect bad

- flag_values :

- [0 1 2]

- valid_min :

- 0

- valid_max :

- 2

- comment :

- Flag indicating the quality of the height correction from crossover calibration. Values of 0, 1, and 2 indicate that the correction is good, suspect, and bad, respectively.
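Because `height_cor_xover_qual` follows the CF `flag_values`/`flag_meanings` convention, the meaning-to-code mapping can be read from the attributes instead of being hard-coded. The sketch below uses that mapping to keep only "good" crossover corrections. The synthetic dataset and the `flag_lookup` helper are hypothetical illustrations.

```python
import numpy as np
import xarray as xr

dims = ("num_lines", "num_pixels")

# Hypothetical stand-in with the attributes shown in the listing.
ds = xr.Dataset(
    {
        "height_cor_xover": (dims, np.array([[0.01, -0.02], [0.03, 0.05]])),
        "height_cor_xover_qual": (dims, np.array([[0, 1], [0, 2]], dtype="float32")),
    }
)
ds["height_cor_xover_qual"].attrs.update(
    flag_values=np.array([0, 1, 2]), flag_meanings="good suspect bad"
)


def flag_lookup(flag_var):
    """Map each flag meaning to its integer code using CF flag attributes."""
    meanings = flag_var.attrs["flag_meanings"].split()
    return {m: int(v) for v, m in zip(flag_var.attrs["flag_values"], meanings)}


codes = flag_lookup(ds["height_cor_xover_qual"])
# Keep the correction only where the flag says "good"; other pixels become NaN.
good_xover = ds["height_cor_xover"].where(ds["height_cor_xover_qual"] == codes["good"])
print(good_xover.values)
```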

Array: 25.97 MiB, shape (98660, 69), float32 numpy.ndarray; chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- rain_rate (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- rain rate from weather model

- source :

- European Centre for Medium-Range Weather Forecasts

- institution :

- ECMWF

- units :

- mm/hr

- valid_min :

- 0

- valid_max :

- 200

- comment :

- Rain rate from weather model.

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- ice_conc (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- concentration of sea ice

- standard_name :

- sea_ice_area_fraction

- source :

- EUMETSAT Ocean and Sea Ice Satellite Applications Facility

- institution :

- EUMETSAT

- units :

- %

- valid_min :

- 0

- valid_max :

- 10000

- comment :

- Concentration of sea ice from model.

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- sea_state_bias_cor (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea state bias correction

- source :

- CNES

- units :

- m

- valid_min :

- -6000

- valid_max :

- 0

- comment :

- Sea state bias correction used to compute ssh_karin. Adding the reported correction to the reported sea surface height results in the uncorrected sea surface height. The wind_speed_karin value is used to compute this quantity.

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- sea_state_bias_cor_2 (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- sea state bias correction

- source :

- CNES

- units :

- m

- valid_min :

- -6000

- valid_max :

- 0

- comment :

- Sea state bias correction used to compute ssh_karin_2. Adding the reported correction to the reported sea surface height results in the uncorrected sea surface height. The wind_speed_karin_2 value is used to compute this quantity.

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- swh_ssb_cor_source (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- source flag for significant wave height information used to compute sea state bias correction

- standard_name :

- status_flag

- flag_meanings :

- nadir_altimeter karin model

- flag_masks :

- [1 2 4]

- valid_min :

- 0

- valid_max :

- 7

- comment :

- Bit flag that indicates the source of significant wave height information that was used to compute the sea state bias correction in sea_state_bias_cor.
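Unlike the quality flags, the `*_ssb_cor_source` variables use CF `flag_masks`. Each of 1, 2 and 4 is a separate bit, so a single pixel can have several sources set at once (hence `valid_max` 7 = 1 + 2 + 4). The sketch below decodes one boolean mask per meaning. The synthetic `src` array and the `decode_bit_flags` helper are hypothetical. It also assumes that missing values, which appear as NaN after decoding to float32, should count as "no bits set".

```python
import numpy as np
import xarray as xr

# Hypothetical stand-in for swh_ssb_cor_source with the attributes shown above.
src = xr.DataArray(
    np.array([[1, 2], [4, 3]], dtype="float32"),
    dims=("num_lines", "num_pixels"),
    attrs={"flag_masks": np.array([1, 2, 4]), "flag_meanings": "nadir_altimeter karin model"},
    name="swh_ssb_cor_source",
)


def decode_bit_flags(flag_var):
    """Return one boolean DataArray per flag meaning (CF flag_masks convention)."""
    masks = [int(m) for m in flag_var.attrs["flag_masks"]]
    meanings = flag_var.attrs["flag_meanings"].split()
    # Values arrive as float32; treat NaN as 0 (no bits set) and cast to integers.
    bits = flag_var.fillna(0).astype("int64")
    return {name: (bits & mask) != 0 for name, mask in zip(meanings, masks)}


flags = decode_bit_flags(src)
print(flags["karin"].values)
```

The same helper applies unchanged to `swh_ssb_cor_source_2`, `wind_speed_ssb_cor_source` and `wind_speed_ssb_cor_source_2`, which share these attributes.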

Array: 25.97 MiB, shape (98660, 69), float32 numpy.ndarray; chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- swh_ssb_cor_source_2 (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- source flag for significant wave height information used to compute sea state bias correction

- standard_name :

- status_flag

- flag_meanings :

- nadir_altimeter karin model

- flag_masks :

- [1 2 4]

- valid_min :

- 0

- valid_max :

- 7

- comment :

- Bit flag that indicates the source of significant wave height information that was used to compute the sea state bias correction in sea_state_bias_cor_2.

Array: 25.97 MiB, shape (98660, 69), float32 numpy.ndarray; chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- wind_speed_ssb_cor_source (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- source flag for wind speed information used to compute sea state bias correction

- standard_name :

- status_flag

- flag_meanings :

- nadir_altimeter karin model

- flag_masks :

- [1 2 4]

- valid_min :

- 0

- valid_max :

- 7

- comment :

- Bit flag that indicates the source of wind speed information that was used to compute the sea state bias correction in sea_state_bias_cor.

Array: 25.97 MiB, shape (98660, 69), float32 numpy.ndarray; chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- wind_speed_ssb_cor_source_2 (num_lines, num_pixels) float32 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- source flag for wind speed information used to compute sea state bias correction

- standard_name :

- status_flag

- flag_meanings :

- nadir_altimeter karin model

- flag_masks :

- [1 2 4]

- valid_min :

- 0

- valid_max :

- 7

- comment :

- Bit flag that indicates the source of wind speed information that was used to compute the sea state bias correction in sea_state_bias_cor_2.

Array: 25.97 MiB, shape (98660, 69), float32 numpy.ndarray; chunk: 2.60 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- volumetric_correlation (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- volumetric correlation

- units :

- 1

- quality_flag :

- ssh_karin_2_qual

- valid_min :

- 0

- valid_max :

- 20000

- comment :

- Volumetric correlation.

Array: 51.94 MiB, shape (98660, 69), float64 numpy.ndarray; chunk: 5.19 MiB, shape (9866, 69); Dask graph: 10 chunks in 21 graph layers

- volumetric_correlation_uncert (num_lines, num_pixels) float64 dask.array<chunksize=(9866, 69), meta=np.ndarray>

- long_name :

- volumetric correlation standard deviation

- units :

- 1

- quality_flag :

- ssh_karin_2_qual

- valid_min :

- 0

- valid_max :

- 10000

- comment :