array(['2015-12-26T12:00:00.000000000', '2015-12-27T12:00:00.000000000',

'2015-12-28T12:00:00.000000000', '2015-12-29T12:00:00.000000000',

'2015-12-30T12:00:00.000000000', '2015-12-31T12:00:00.000000000',

'2016-01-01T12:00:00.000000000', '2016-01-02T12:00:00.000000000',

'2016-01-03T12:00:00.000000000', '2016-01-04T12:00:00.000000000',

'2016-01-05T12:00:00.000000000', '2016-01-06T12:00:00.000000000',

'2016-01-07T12:00:00.000000000', '2016-01-08T12:00:00.000000000',

'2016-01-09T12:00:00.000000000', '2016-01-10T12:00:00.000000000',

'2016-01-11T12:00:00.000000000', '2016-01-12T12:00:00.000000000',

'2016-01-13T12:00:00.000000000', '2016-01-14T12:00:00.000000000',

'2016-01-15T12:00:00.000000000', '2016-01-16T12:00:00.000000000',

'2016-12-26T12:00:00.000000000', '2016-12-27T12:00:00.000000000',

'2016-12-28T12:00:00.000000000', '2016-12-29T12:00:00.000000000',

'2016-12-30T12:00:00.000000000', '2016-12-31T12:00:00.000000000',

'2017-01-01T12:00:00.000000000', '2017-01-02T12:00:00.000000000',

'2017-01-03T12:00:00.000000000', '2017-01-04T12:00:00.000000000',

'2017-01-05T12:00:00.000000000', '2017-01-06T12:00:00.000000000',

'2017-01-07T12:00:00.000000000', '2017-01-08T12:00:00.000000000',

'2017-01-09T12:00:00.000000000', '2017-01-10T12:00:00.000000000',

'2017-01-11T12:00:00.000000000', '2017-01-12T12:00:00.000000000',

'2017-01-13T12:00:00.000000000', '2017-01-14T12:00:00.000000000',

'2017-01-15T12:00:00.000000000', '2017-01-16T12:00:00.000000000',

'2017-12-26T12:00:00.000000000', '2017-12-27T12:00:00.000000000',

'2017-12-28T12:00:00.000000000', '2017-12-29T12:00:00.000000000',

'2017-12-30T12:00:00.000000000', '2017-12-31T12:00:00.000000000',

'2018-01-01T12:00:00.000000000', '2018-01-02T12:00:00.000000000',

'2018-01-03T12:00:00.000000000', '2018-01-04T12:00:00.000000000',

'2018-01-05T12:00:00.000000000', '2018-01-06T12:00:00.000000000',

'2018-01-07T12:00:00.000000000', '2018-01-08T12:00:00.000000000',

'2018-01-09T12:00:00.000000000', '2018-01-10T12:00:00.000000000',

'2018-01-11T12:00:00.000000000', '2018-01-12T12:00:00.000000000',

'2018-01-13T12:00:00.000000000', '2018-01-14T12:00:00.000000000',

'2018-01-15T12:00:00.000000000', '2018-01-16T12:00:00.000000000',

'2018-12-26T12:00:00.000000000', '2018-12-27T12:00:00.000000000',

'2018-12-28T12:00:00.000000000', '2018-12-29T12:00:00.000000000',

'2018-12-30T12:00:00.000000000', '2018-12-31T12:00:00.000000000',

'2019-01-01T12:00:00.000000000', '2019-01-02T12:00:00.000000000',

'2019-01-03T12:00:00.000000000', '2019-01-04T12:00:00.000000000',

'2019-01-05T12:00:00.000000000', '2019-01-06T12:00:00.000000000',

'2019-01-07T12:00:00.000000000', '2019-01-08T12:00:00.000000000',

'2019-01-09T12:00:00.000000000', '2019-01-10T12:00:00.000000000',

'2019-01-11T12:00:00.000000000', '2019-01-12T12:00:00.000000000',

'2019-01-13T12:00:00.000000000', '2019-01-14T12:00:00.000000000',

'2019-01-15T12:00:00.000000000', '2019-01-16T12:00:00.000000000',

'2019-12-26T12:00:00.000000000', '2019-12-27T12:00:00.000000000',

'2019-12-28T12:00:00.000000000', '2019-12-29T12:00:00.000000000',

'2019-12-30T12:00:00.000000000', '2019-12-31T12:00:00.000000000',

'2020-01-01T12:00:00.000000000', '2020-01-02T12:00:00.000000000',

'2020-01-03T12:00:00.000000000', '2020-01-04T12:00:00.000000000',

'2020-01-05T12:00:00.000000000', '2020-01-06T12:00:00.000000000',

'2020-01-07T12:00:00.000000000', '2020-01-08T12:00:00.000000000',

'2020-01-09T12:00:00.000000000', '2020-01-10T12:00:00.000000000',

'2020-01-11T12:00:00.000000000', '2020-01-12T12:00:00.000000000',

'2020-01-13T12:00:00.000000000', '2020-01-14T12:00:00.000000000',

'2020-01-15T12:00:00.000000000', '2020-01-16T12:00:00.000000000',

'2020-12-26T12:00:00.000000000', '2020-12-27T12:00:00.000000000',

'2020-12-28T12:00:00.000000000', '2020-12-29T12:00:00.000000000',

'2020-12-30T12:00:00.000000000', '2020-12-31T12:00:00.000000000',

'2021-01-01T12:00:00.000000000', '2021-01-02T12:00:00.000000000',

'2021-01-03T12:00:00.000000000', '2021-01-04T12:00:00.000000000',

'2021-01-05T12:00:00.000000000', '2021-01-06T12:00:00.000000000',

'2021-01-07T12:00:00.000000000', '2021-01-08T12:00:00.000000000',

'2021-01-09T12:00:00.000000000', '2021-01-10T12:00:00.000000000',

'2021-01-11T12:00:00.000000000', '2021-01-12T12:00:00.000000000',

'2021-01-13T12:00:00.000000000', '2021-01-14T12:00:00.000000000',

'2021-01-15T12:00:00.000000000', '2021-01-16T12:00:00.000000000',

'2021-12-26T12:00:00.000000000', '2021-12-27T12:00:00.000000000',

'2021-12-28T12:00:00.000000000', '2021-12-29T12:00:00.000000000',

'2021-12-30T12:00:00.000000000', '2021-12-31T12:00:00.000000000',

'2022-01-01T12:00:00.000000000', '2022-01-02T12:00:00.000000000',

'2022-01-03T12:00:00.000000000', '2022-01-04T12:00:00.000000000',

'2022-01-05T12:00:00.000000000', '2022-01-06T12:00:00.000000000',

'2022-01-07T12:00:00.000000000', '2022-01-08T12:00:00.000000000',

'2022-01-09T12:00:00.000000000', '2022-01-10T12:00:00.000000000',

'2022-01-11T12:00:00.000000000', '2022-01-12T12:00:00.000000000',

'2022-01-13T12:00:00.000000000', '2022-01-14T12:00:00.000000000',

'2022-01-15T12:00:00.000000000', '2022-01-16T12:00:00.000000000',

'2022-12-26T12:00:00.000000000', '2022-12-27T12:00:00.000000000',

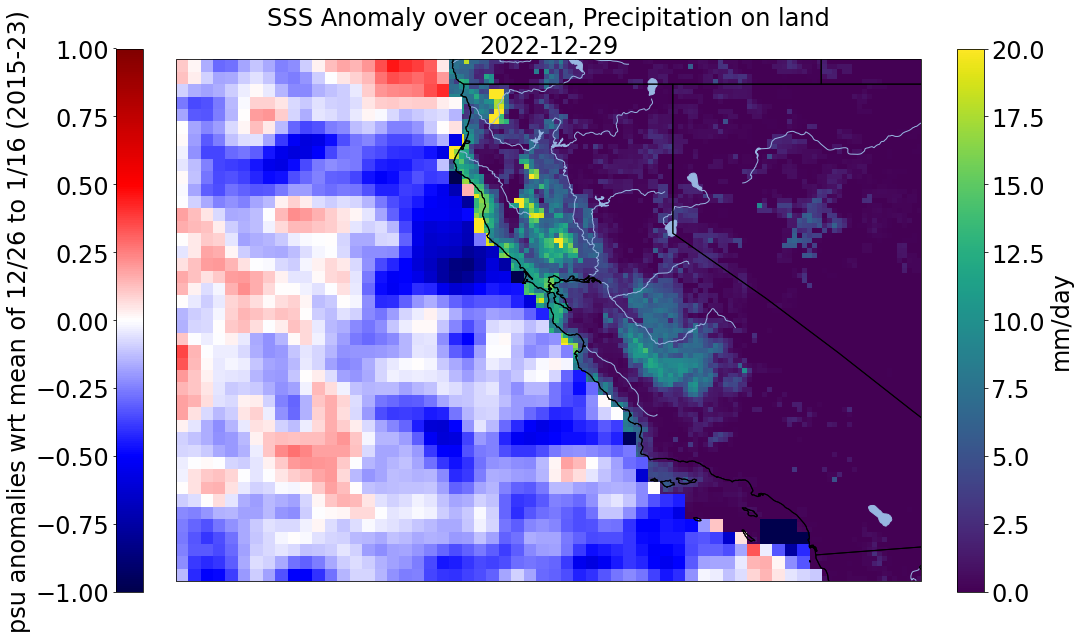

'2022-12-28T12:00:00.000000000', '2022-12-29T12:00:00.000000000',

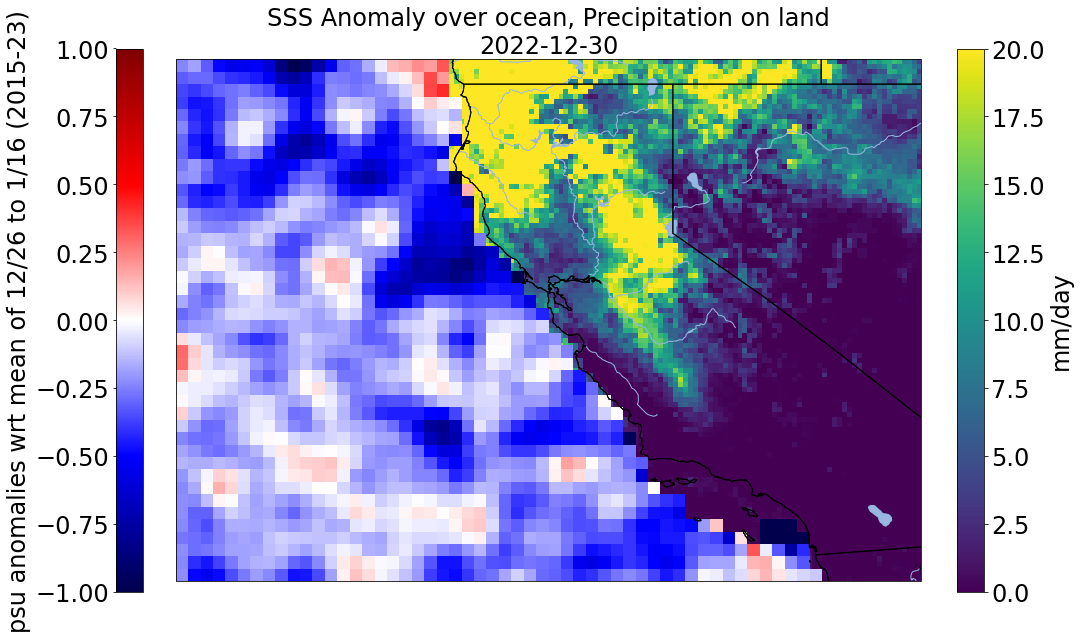

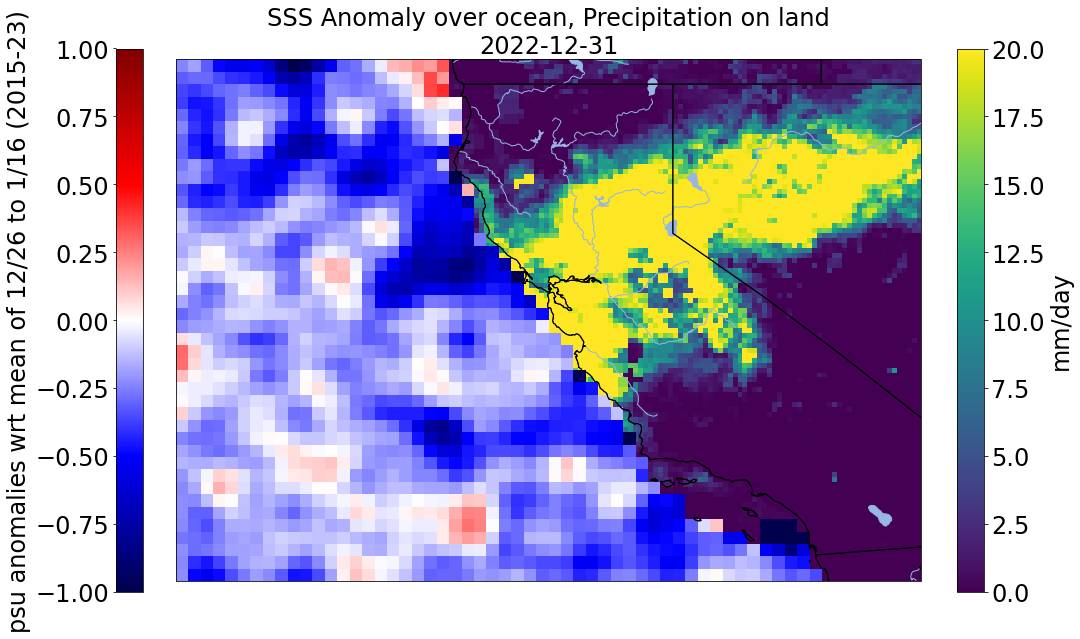

'2022-12-30T12:00:00.000000000', '2022-12-31T12:00:00.000000000',

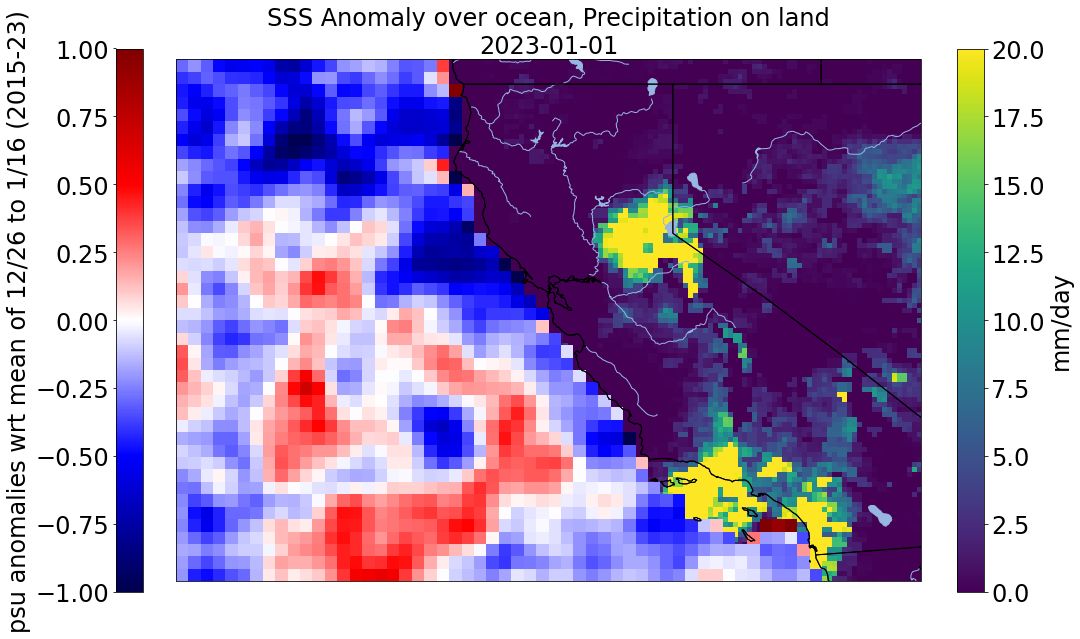

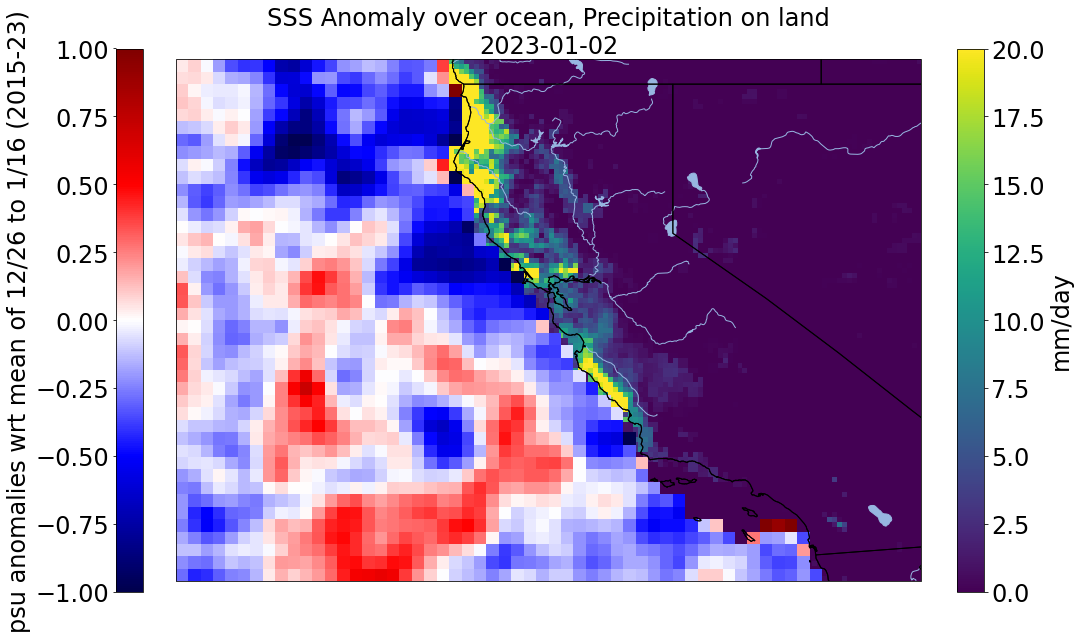

'2023-01-01T12:00:00.000000000', '2023-01-02T12:00:00.000000000',

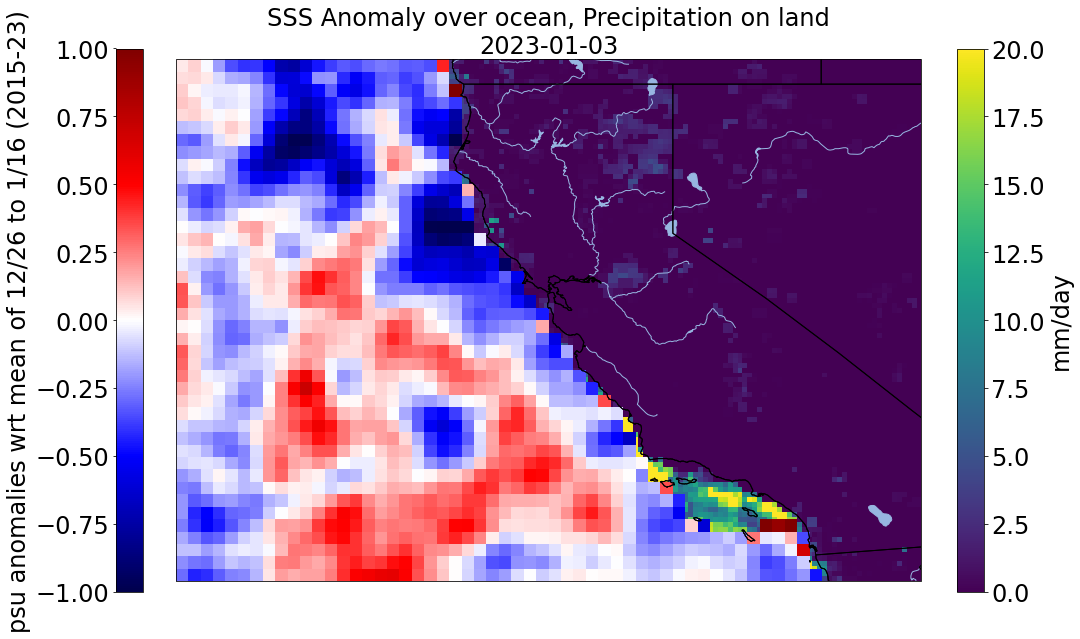

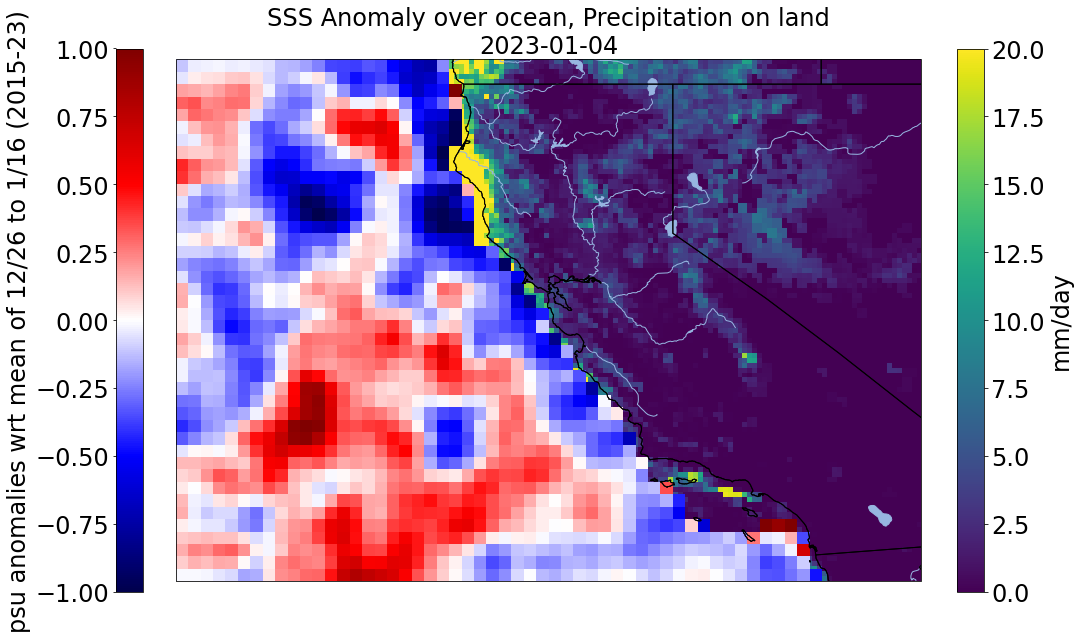

'2023-01-03T12:00:00.000000000', '2023-01-04T12:00:00.000000000',

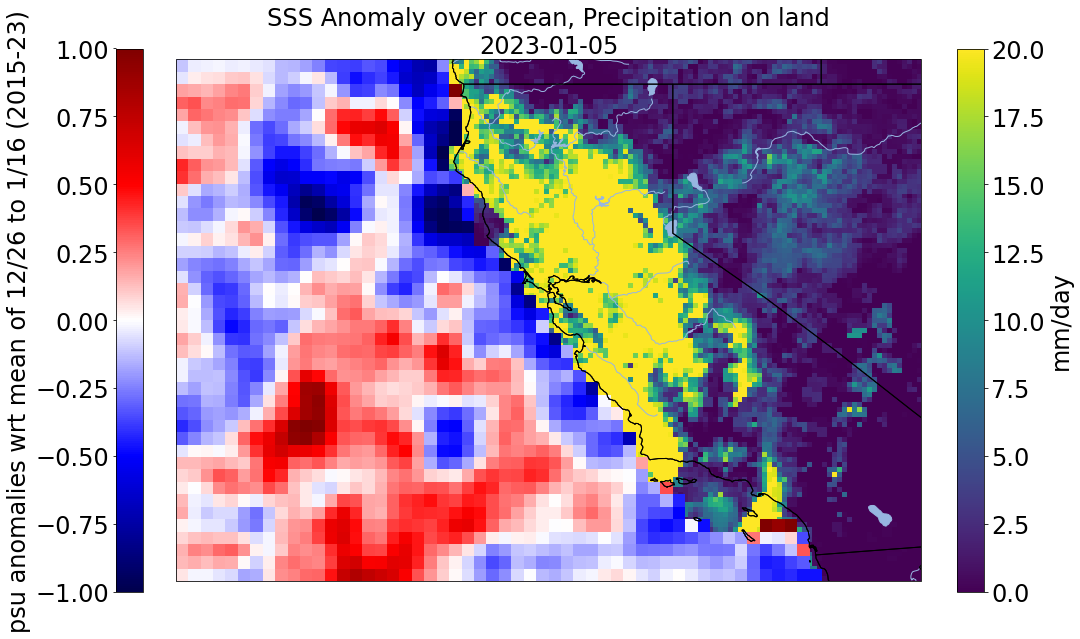

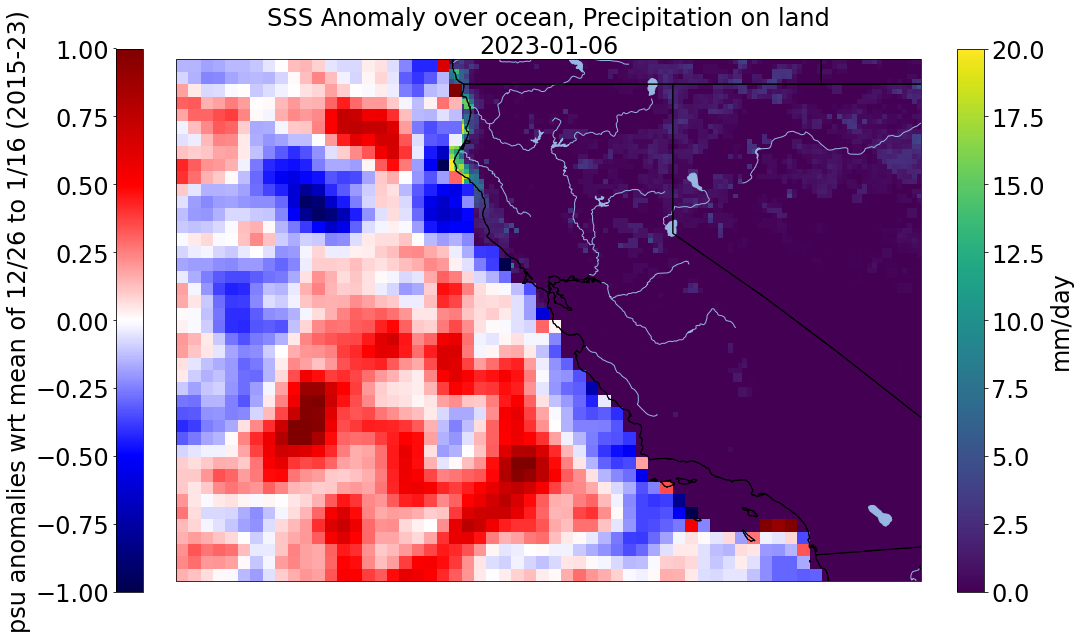

'2023-01-05T12:00:00.000000000', '2023-01-06T12:00:00.000000000',

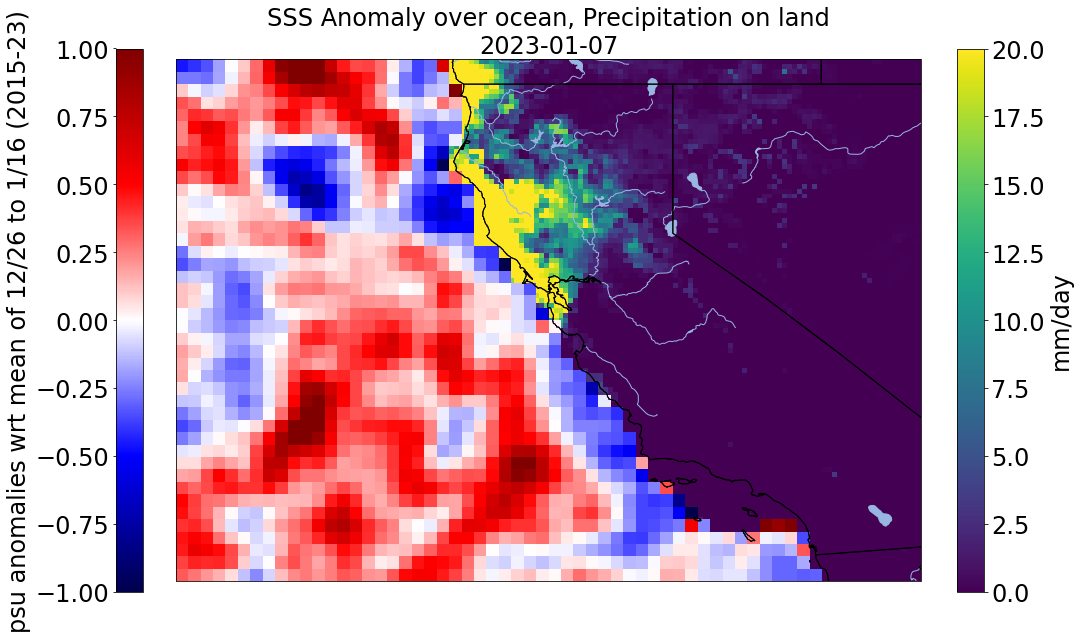

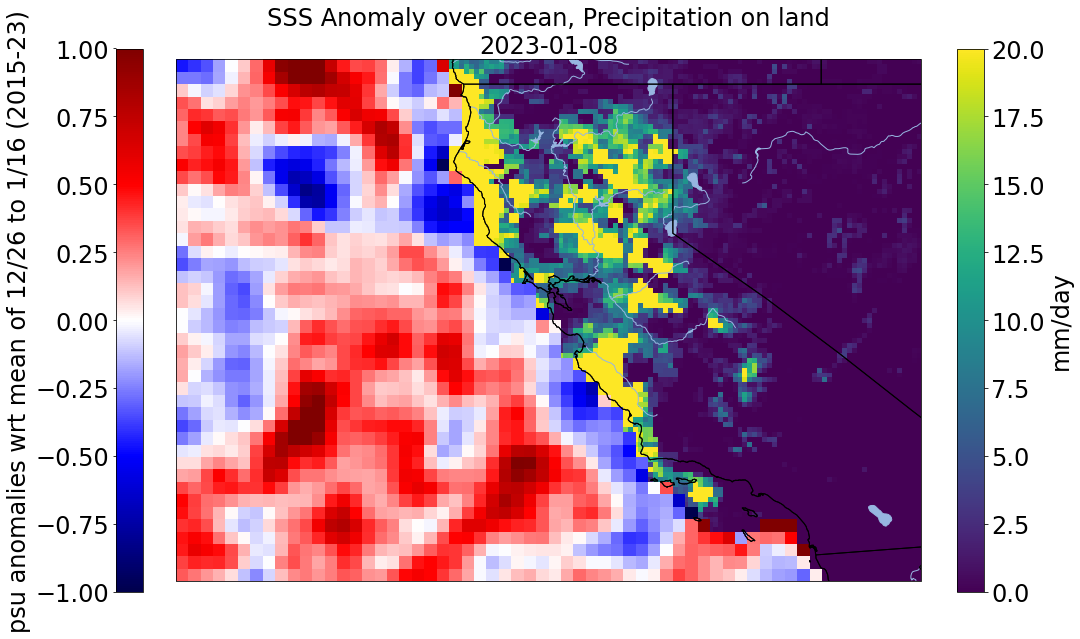

'2023-01-07T12:00:00.000000000', '2023-01-08T12:00:00.000000000',

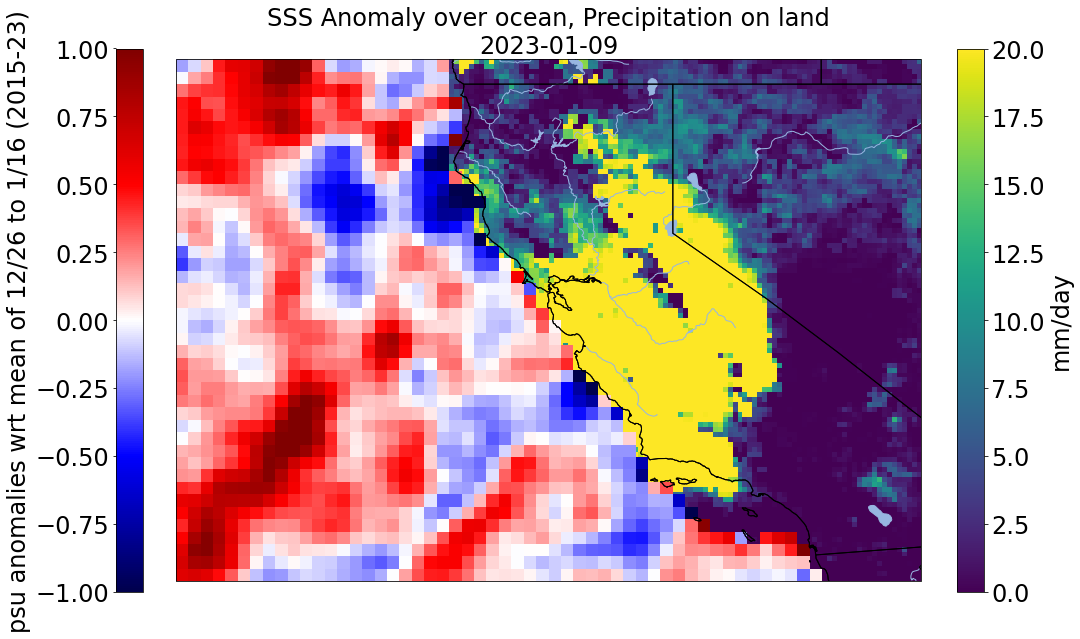

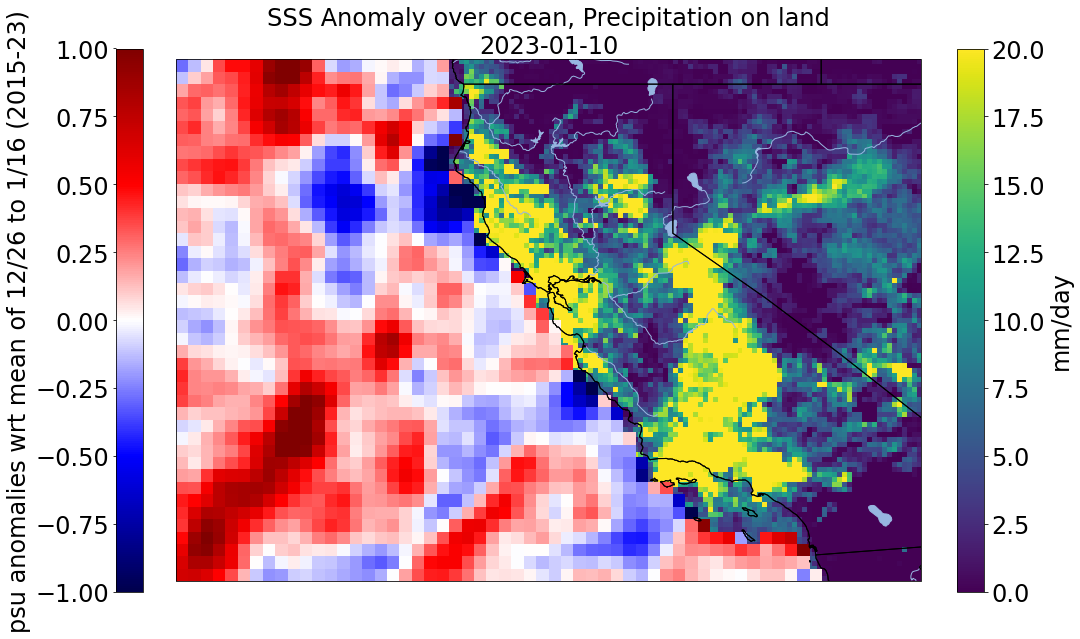

'2023-01-09T12:00:00.000000000', '2023-01-10T12:00:00.000000000',

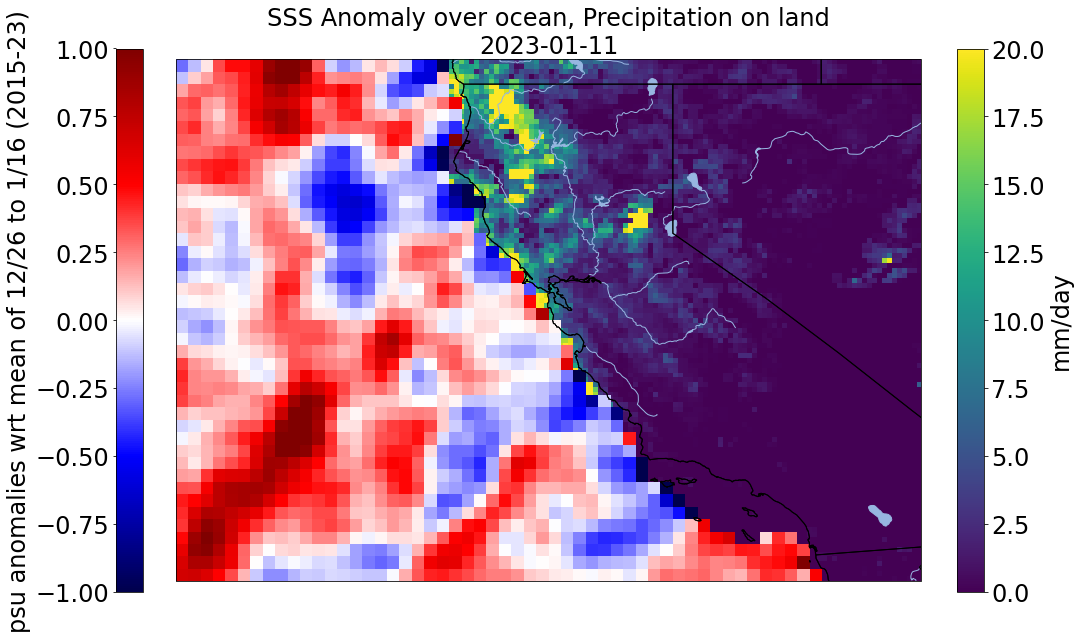

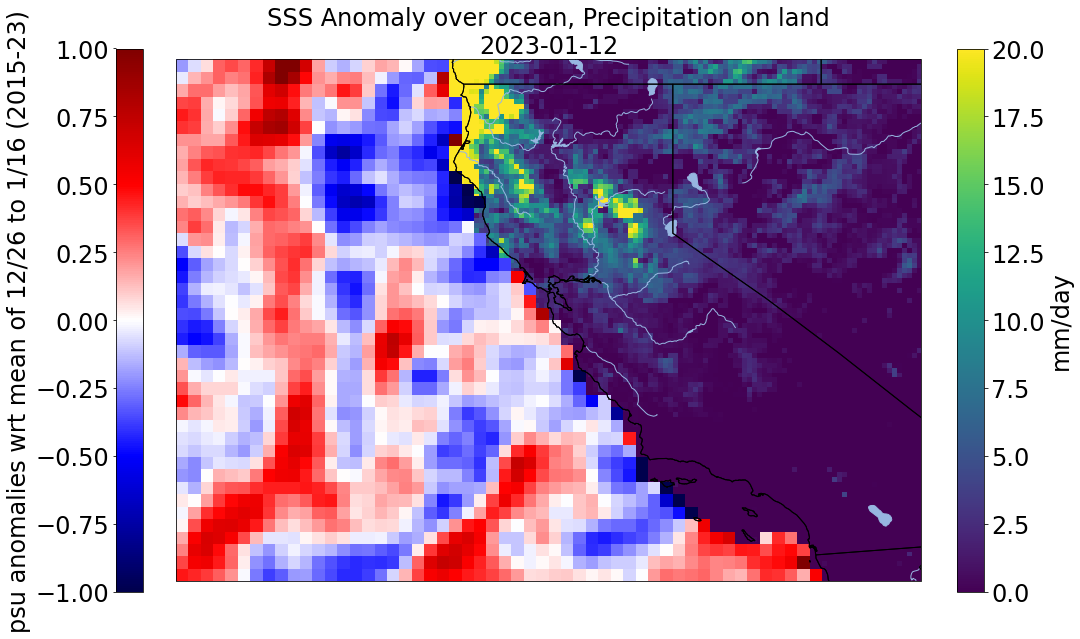

'2023-01-11T12:00:00.000000000', '2023-01-12T12:00:00.000000000',

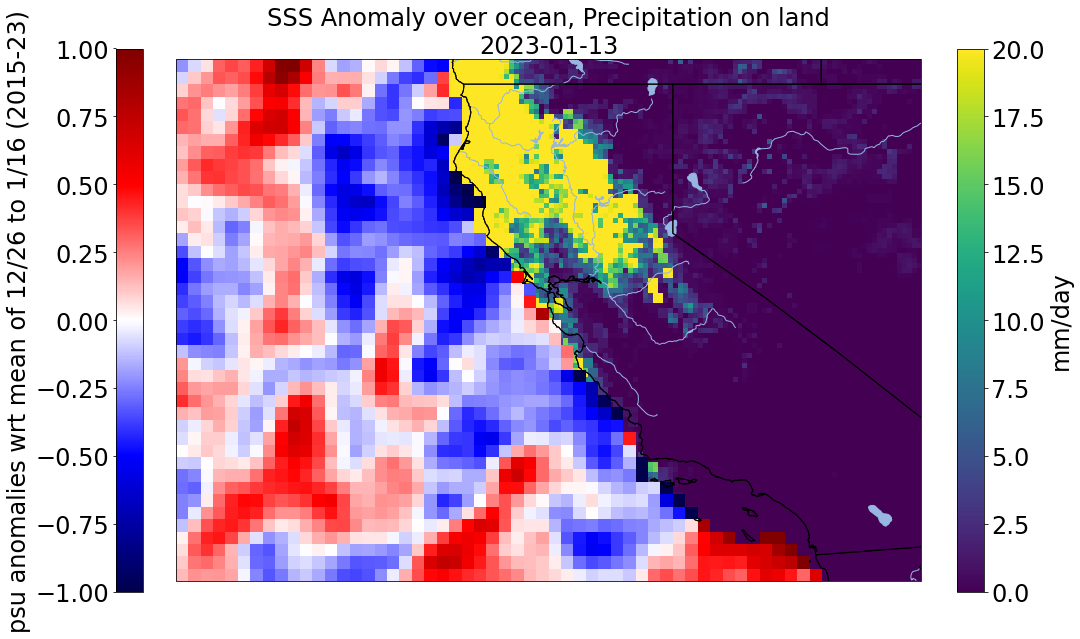

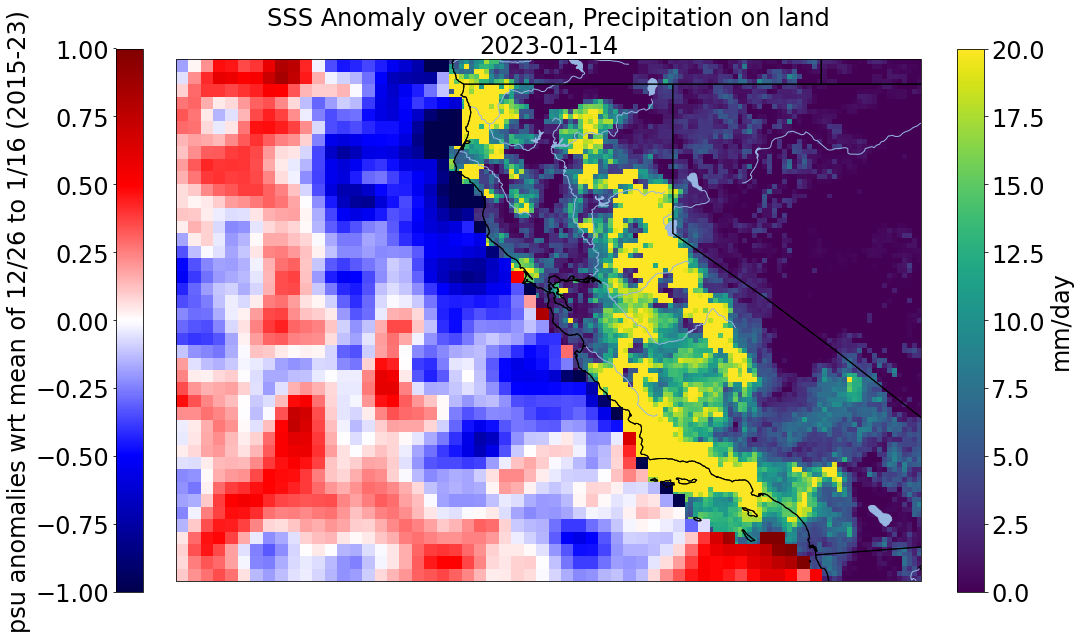

'2023-01-13T12:00:00.000000000', '2023-01-14T12:00:00.000000000',

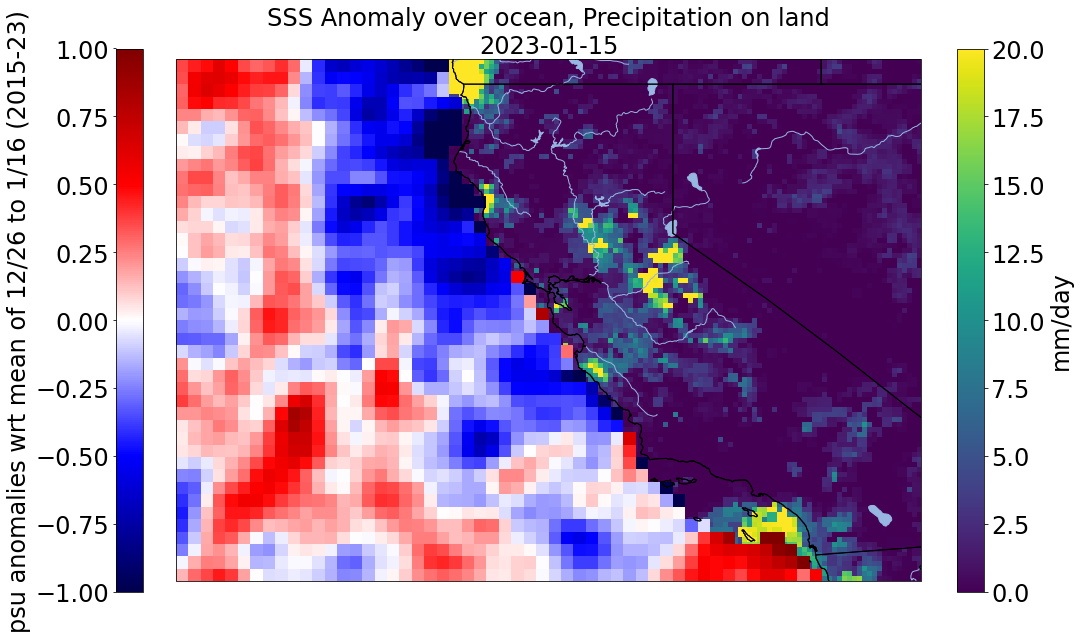

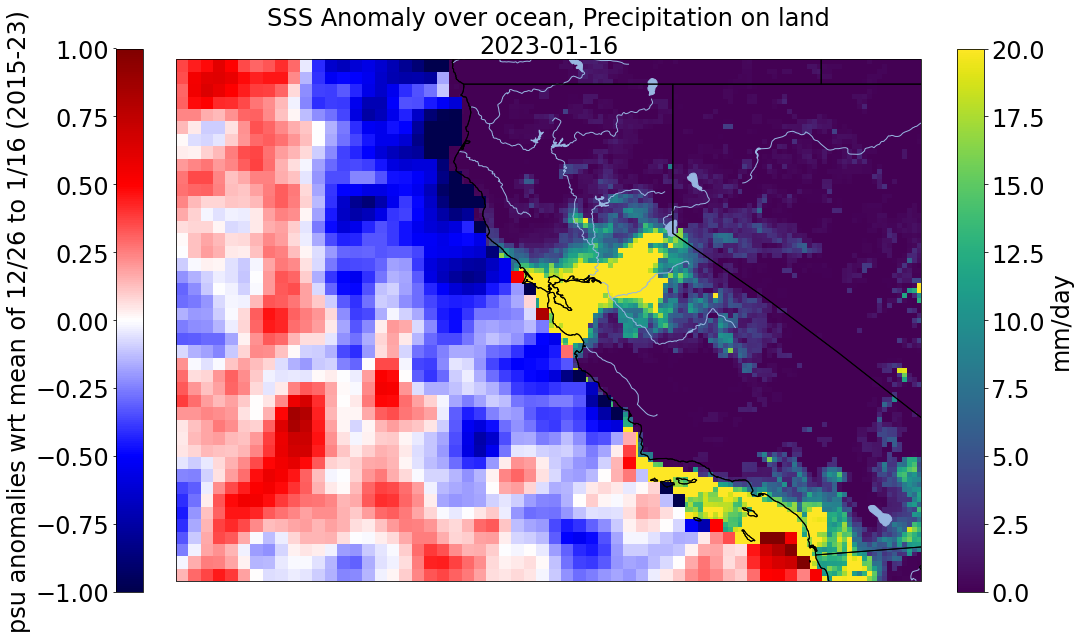

'2023-01-15T12:00:00.000000000', '2023-01-16T12:00:00.000000000'],

dtype='datetime64[ns]')
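The code that produced this array is not shown. Its contents are 176 daily timestamps at 12:00, grouped into eight 22-day windows that each run from 26 December to 16 January, one per winter from 2015/16 to 2022/23. The sketch below builds an equivalent array with NumPy. It assumes (this is not confirmed by the source) that the array came from filtering a daily 12:00 series by calendar day; the names `days`, `month`, `day_of_month`, `window`, and `selected` are hypothetical.

```python
import numpy as np

# Assumption: a daily series at 12:00 covering the whole period.
# The stop value is exclusive, so the last element is 2023-01-16T12:00.
days = np.arange(
    np.datetime64("2015-12-26T12:00"),
    np.datetime64("2023-01-17T12:00"),
    np.timedelta64(1, "D"),
).astype("datetime64[ns]")

# Month number (1-12): datetime64[M] counts months since 1970-01.
month = days.astype("datetime64[M]").astype(np.int64) % 12 + 1

# Day of month (1-31): days elapsed since the first of the month, plus one.
month_start = days.astype("datetime64[M]").astype("datetime64[D]")
day_of_month = (days.astype("datetime64[D]") - month_start).astype(np.int64) + 1

# Keep 26-31 December and 1-16 January of every year.
window = ((month == 12) & (day_of_month >= 26)) | ((month == 1) & (day_of_month <= 16))
selected = days[window]

print(repr(selected))
```

Filtering on month and day, rather than hard-coding eight date ranges, keeps the window definition in one place, so extending the period only requires changing the `np.arange` bounds.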